Granular Ransomware Detection in NBU 10.4

Rapid detection of ransomware attacks is an essential component of a comprehensive cyber-resilient solution. These attacks are commonly assumed to follow a basic life cycle: (i) exploit some vulnerability to infiltrate the system, (ii) encrypt files rapidly and blindly using a secure encryption protocol, and (iii) leave behind a breadcrumb trail that can be detected by heuristics. Indeed, most existing ransomware detection techniques revolve around these bare assumptions.

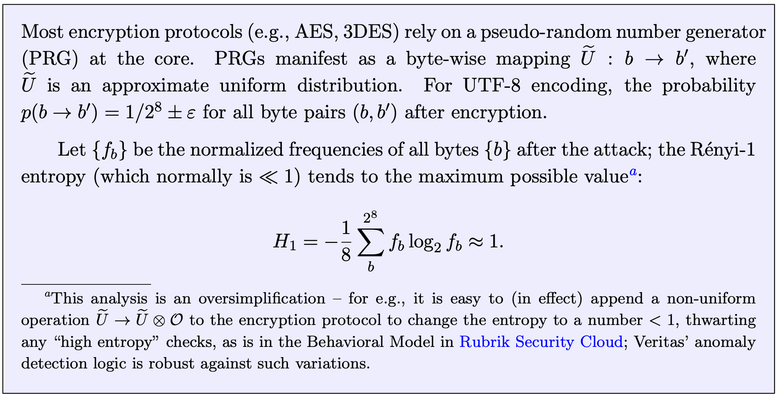

However, these assumptions have steadily broken down: the 2023 threat report from CrowdStrike showed that 71% of attacks are off-the-land attacks, up 16% since 2022. Among the remaining 29% of on-the-land variants, several are polymorphic ransomware strains, whose cryptographic hashes can evolve rapidly over time (HYAS Labs), as shown in the Table below. In this evolving landscape, common techniques like hashes and YARA rules are increasingly inadequate for reliably detecting ransomware.

Other ubiquitous challenges include: (i) SIEM/SOAR solutions failing to detect unfamiliar threats, leading to (ii) an overload of false positives, and (iii) hefty pricing structures. In this blog, we explain how Veritas tackles these challenges to build a resilient and adaptive near-real-time in-line detection algorithm.

Challenge 1: An avalanche of ransomware variants requires heuristic-based methods to maintain an ever-expanding database of detailed behavioral patterns, which is an expensive and unscalable affair.

Instead of building complex heuristics, NetBackup solves this problem by identifying underlying patterns in carefully curated features using file metadata – and flagging sudden, unexpected and significant deviations from them using unsupervised Machine Learning techniques. We can break this down into two phases:

- Learning phase: This phase lasts for about 30 days, during which patterns in file metadata are captured as a collection of histograms. As the learning phase draws to a close, statistics of the underlying patterns are expected to have been learned. The stable histogram captures the coarse-grained fluctuations in the file metadata. The finer daily fluctuations, expected to be Gaussian distributed about the stable histogram, are modeled by a statistical measure – the KL Divergence.

  Progressive states of the baseline histogram as the learning proceeds. It is interesting to note how they slowly converge to the stable histogram (in red).

- Action phase: This phase is initiated for assets when the stable baseline criterion is achieved. As the backup is ongoing, we ask the statistical question: "Is the current pattern within the stable histogram + expected Gaussian noise?"
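The statistical question asked in the action phase can be illustrated with a small sketch that compares one day's histogram against a stable baseline using the KL divergence. The feature (file-size buckets), the bin counts, and the smoothing constant `eps` are assumptions chosen for illustration; the post does not say which metadata features or parameters NetBackup actually uses.

```python
import math

def normalize(counts, eps=1e-9):
    """Turn raw bin counts into probabilities; eps (assumed) avoids log(0)."""
    total = sum(counts) + eps * len(counts)
    return [(c + eps) / total for c in counts]

def kl_divergence(p_counts, q_counts):
    """KL(P || Q) in nats between two histograms given as raw bin counts."""
    if len(p_counts) != len(q_counts):
        raise ValueError("histograms must share the same bins")
    p = normalize(p_counts)
    q = normalize(q_counts)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical file-size histograms (number of files per size bucket).
baseline = [500, 300, 150, 40, 10]      # stable histogram after learning
benign_day = [510, 290, 155, 38, 7]     # small day-to-day fluctuation
infected_day = [50, 60, 100, 400, 390]  # distribution shifted by an attack

kl_benign = kl_divergence(benign_day, baseline)
kl_infected = kl_divergence(infected_day, baseline)
print(f"benign: {kl_benign:.4f}  infected: {kl_infected:.4f}")
```

With these made-up counts the benign day yields a divergence close to zero, while the shifted histogram yields a value orders of magnitude larger, which is the separation the approach relies on.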

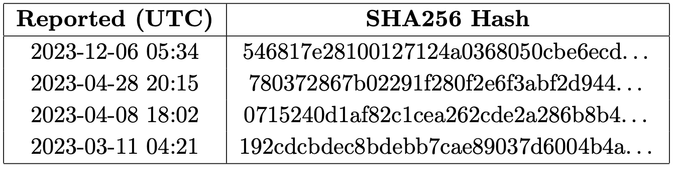

The stable baseline (red) is representative of the benign backup (in green; they strongly overlap). However, the infected backup is not within the expected Gaussian fluctuations of the stable baseline.

While we refrain from gory mathematical details, let us test how this strategy works in action. When fed into our clustering model (see Fig below), the expected Gaussian fluctuations from the stable baseline, as measured by the KL Divergence, will cluster near zero for benign backups. For infected backups, the patterns can no longer be considered “fluctuations”, and their KL Divergences will stick out as anomalies.
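To make the idea of divergences "sticking out" concrete, here is a minimal sketch that flags values far above the bulk near zero. It uses a robust score based on the median and the median absolute deviation as a simple stand-in; it is not the clustering model described in this post, and the threshold of 6.0 and the sample values are assumptions.

```python
import statistics

def flag_anomalies(kl_values, threshold=6.0):
    """Return indices whose KL divergence lies far above the typical value.

    Stand-in for a clustering model: a robust z-score built from the
    median and the median absolute deviation (MAD).
    """
    median = statistics.median(kl_values)
    mad = statistics.median([abs(v - median) for v in kl_values])
    scale = mad if mad > 0 else 1e-12  # guard against a zero spread
    return [i for i, v in enumerate(kl_values)
            if (v - median) / scale > threshold]

# Hypothetical KL divergences for seven backups; the last one is infected.
kl_history = [0.001, 0.002, 0.0015, 0.003, 0.0008, 0.0021, 2.1]
flagged = flag_anomalies(kl_history)
print(flagged)
```

Only the upper tail is tested because a divergence smaller than usual means the backup is closer to the baseline, not further from it.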

The baselines are updated incrementally and indefinitely to stay relevant in the face of potential concept drift (change in underlying assumptions) or data drift (change in underlying statistics). This approach allows the detection to generalize to potentially infinite ransomware variants, while ensuring that user-initiated, benign changes in data are never flagged.
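One common way to keep a baseline histogram current is an exponential moving average, sketched below. The post does not describe the actual update rule, so the function name `update_baseline` and the blending factor `alpha` are hypothetical.

```python
def update_baseline(baseline, observed, alpha=0.05):
    """Blend a new normalized histogram into the baseline.

    alpha (assumed) controls how quickly old behavior is forgotten:
    small values adapt slowly to drift, large values adapt quickly.
    """
    if len(baseline) != len(observed):
        raise ValueError("histograms must share the same bins")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    updated = [(1 - alpha) * b + alpha * o for b, o in zip(baseline, observed)]
    total = sum(updated)
    return [u / total for u in updated]  # renormalize against rounding error

# Hypothetical three-bin histograms, already normalized to sum to 1.
baseline = [0.5, 0.3, 0.2]
todays_histogram = [0.2, 0.3, 0.5]
new_baseline = update_baseline(baseline, todays_histogram, alpha=0.1)
print(new_baseline)
```

In a scheme like this, it would be natural to feed only backups that were not flagged as anomalous into the update, so that an attack cannot shift the baseline toward itself; that choice is an assumption here, not something stated in the post.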

Challenge 2: As crime organizations transform to provide Ransomware-as-a-Service (RWaaS), attacks increasingly evade traditional detection methods like honeypots and header checksums. While vendors have incorporated full-content analytics, these suffer from high overhead (leading to slow backups), limited applicability, and concept drift.

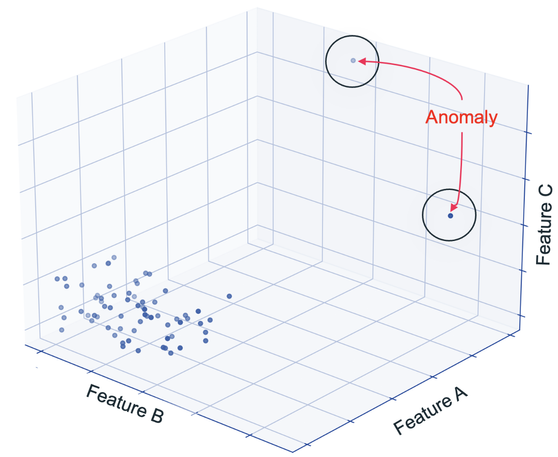

NetBackup solves all the problems above by looking at file content at varying levels of granularity and uncovering hidden patterns or underlying trends. The backbone of this logic is a statistical measure of randomness, called the Rényi entropy, that characterizes the byte-level distribution of file content. When files are infected or encrypted by ransomware, their entropy changes; for example, encryption of common text files will lead to an increase in entropy. In general, however, the change depends on the file type(s), the encryption protocol, and other parameters in the malware code (CCIS paper, v. 1143).
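For reference, the Rényi entropy of order $\alpha$ for a byte distribution $p_0, \dots, p_{255}$ is $H_\alpha = \frac{1}{1-\alpha} \log_2 \sum_i p_i^{\alpha}$ for $\alpha \ge 0$, $\alpha \ne 1$, and it reduces to the Shannon entropy in the limit $\alpha \to 1$. The sketch below computes it for a byte string. The choice of $\alpha = 2$ and the sample data are assumptions; the post does not state which order NetBackup uses, and random bytes are used only as a rough stand-in for ciphertext.

```python
import math
import os
from collections import Counter

def renyi_entropy(data: bytes, alpha: float = 2.0) -> float:
    """Rényi entropy, in bits per byte, of the byte-value distribution."""
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if not data:
        return 0.0
    n = len(data)
    probs = [count / n for count in Counter(data).values()]
    if math.isclose(alpha, 1.0):
        # Limit alpha -> 1 is the Shannon entropy.
        return -sum(p * math.log2(p) for p in probs)
    return math.log2(sum(p ** alpha for p in probs)) / (1.0 - alpha)

plain_text = b"The quick brown fox jumps over the lazy dog. " * 200
random_like = os.urandom(len(plain_text))  # stand-in for encrypted content

print(f"text:        {renyi_entropy(plain_text):.2f} bits/byte")
print(f"random-like: {renyi_entropy(random_like):.2f} bits/byte")
```

The maximum is 8 bits per byte, reached when all 256 byte values are equally likely; plain text sits well below that, which is why encrypting it raises the entropy.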

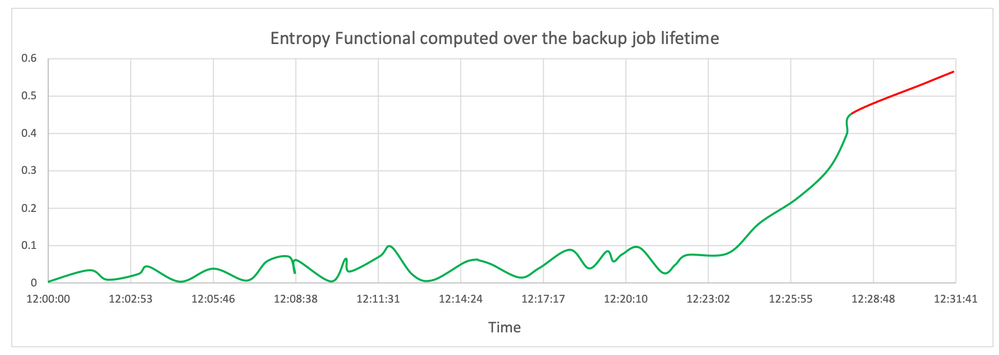

NetBackup computes entropy in-line during the backup process with less than 1% CPU overhead and undetectable latency, using a patent-pending technique. The entropy values are wrangled into highly non-linear metrics before being concurrently ingested by a machine learning model, which detects statistically significant signatures of malicious tampering of file content (see Fig below). This strategy makes minimal assumptions about the attack details, allows generalization to unseen ransomware variants and renders futile any masking or spoofing of “normal behavior”.
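The general idea of computing entropy in a single pass, as data streams by, can be sketched as follows. This is only an illustration of block-wise entropy over a stream and makes no claim about the patent-pending technique; the block size, the use of order-2 Rényi (collision) entropy, and the function names are all assumptions.

```python
import io
import math
from collections import Counter

def collision_entropy(block: bytes) -> float:
    """Rényi entropy of order 2, in bits per byte, for one non-empty block."""
    n = len(block)
    return -math.log2(sum((c / n) ** 2 for c in Counter(block).values()))

def entropy_profile(stream, block_size=4096):
    """Yield (offset, entropy) for each block while reading the stream once."""
    offset = 0
    while True:
        block = stream.read(block_size)
        if not block:
            break
        yield offset, collision_entropy(block)
        offset += len(block)

# Hypothetical stream: one block of constant bytes, then one uniform block.
stream = io.BytesIO(b"a" * 4096 + bytes(range(256)) * 16)
profile = list(entropy_profile(stream))
for offset, entropy in profile:
    print(f"offset {offset:>6}: {entropy:.2f} bits/byte")
```

A per-block profile of this kind gives a view of content at a finer granularity than a single per-file value, for example when only part of a file has been encrypted.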

Challenge 3: Extensive testing in Veritas REDLab has shown that the Rényi entropy, when applied appropriately, is the strongest indicator of ransomware activity. However, other vendors rely primarily on file metadata tampering, while entropy is relegated to a secondary sanity check – in part due to the difficulty in computing the entropy in-line.

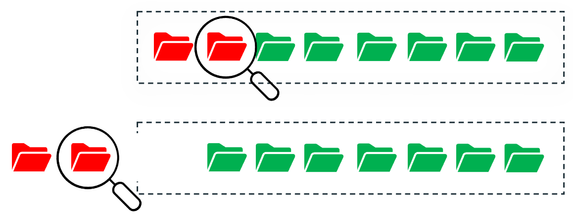

NetBackup takes the (logically right) entropy-first approach by utilizing a patent-pending invention, streamlined to fit our detection pipeline. When a significant level of anomaly is detected, the secondary check on file metadata patterns is initiated. This in-line detection ensures that only pristine data enters the immutable storage, and stays that way (see Fig below). This two-stage pipeline is also designed to minimize false positives: the current estimate of detection accuracy is 99.94% and the false-positive rate is 0.07%.
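The control flow of an entropy-first, two-stage check might look like the sketch below. The scores, thresholds, verdict labels, and the function name `inspect_backup` are hypothetical; in particular, treating a backup as clean when the metadata check does not confirm the entropy signal is an assumption made to illustrate how a second stage can reduce false positives.

```python
from typing import Callable

def inspect_backup(
    entropy_score: float,
    metadata_check: Callable[[], float],
    entropy_threshold: float = 0.8,
    metadata_threshold: float = 0.5,
) -> str:
    """Entropy-first triage; the metadata check runs only if stage one fires."""
    # Stage 1: primary signal from content entropy.
    if entropy_score < entropy_threshold:
        return "clean"
    # Stage 2: secondary check on file metadata patterns, evaluated lazily.
    metadata_score = metadata_check()
    if metadata_score >= metadata_threshold:
        return "anomaly"
    return "clean"  # assumed: an unconfirmed entropy signal is not alerted

# Hypothetical scores in [0, 1]; a real system would compute these.
print(inspect_backup(0.10, lambda: 0.9))  # stage 2 is never called
print(inspect_backup(0.95, lambda: 0.9))  # both stages agree
print(inspect_backup(0.95, lambda: 0.1))  # stage 2 does not confirm
```

Passing the metadata check as a callable keeps the second stage off the hot path: it costs nothing for the majority of backups that pass the entropy stage.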

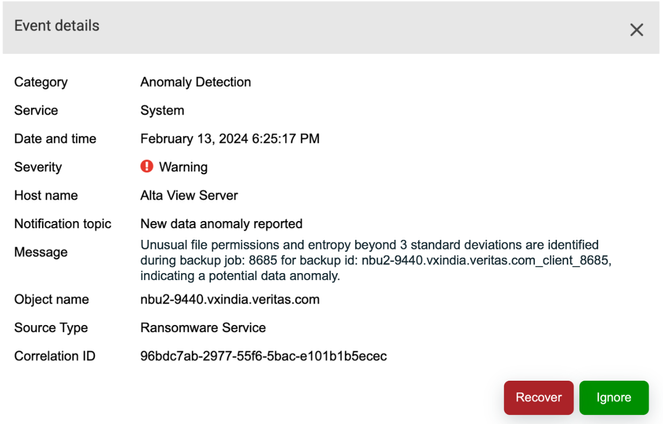

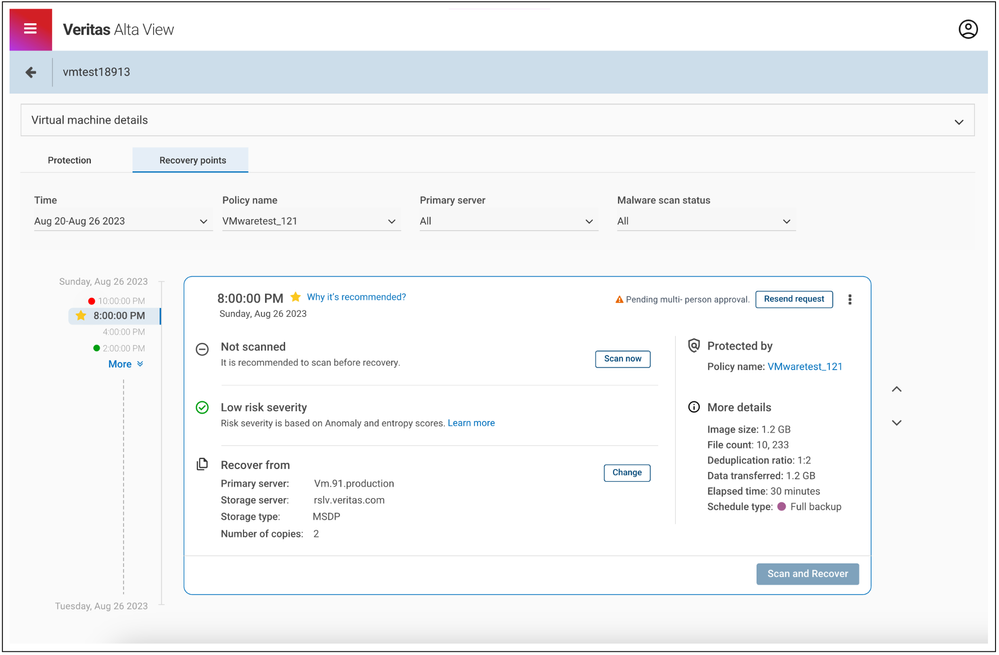

Next steps: In the rare event of an erroneous anomaly alert, the user may report it with a single click (see Fig below). The feedback mechanism is designed so that a “similar” anomaly will never be raised again. A ransomware alert is never a desirable notification to receive. Should the need arise, NetBackup assists the user during the entire recovery process, starting with intelligent automated recovery point suggestions that best meet the user’s RPO and RTO preferences.
