- Microsoft’s Shared Virtual hard Disk consumption f...

on 07-02-2015 06:43 AM

Introduction

The objective of this white paper is to describe VERITAS Cluster Server (VCS) support for Microsoft's shared VHDX feature in a Hyper-V environment. To use the information in this white paper, you must have a working knowledge of administering VERITAS Cluster Server.

Starting in Windows Server 2012 R2, Hyper-V makes it possible to share a virtual hard disk file between multiple virtual machines. Sharing a virtual hard disk file (.vhdx) provides the shared storage that is necessary for a Hyper-V guest failover cluster. This is also referred to as a virtual machine failover cluster.

VERITAS Cluster Server (VCS) supports this feature, and this white paper provides details about using shared VHDX files as the shared storage for a VCS configuration in a Hyper-V environment.

The Challenges To A Successful Cluster Deployment

To properly support application-level failover in Hyper-V guests, VERITAS Cluster Server requires direct SAN access using either iSCSI or Virtual Fibre Channel. As a result, one of the more prevalent causes of delay in deploying clustered applications is the proper allocation and configuration of shared storage in an SFWHA environment. In most cases this requires either specific SAN expertise or a degree of patience while your operations team responds to the storage configuration request.

About VERITAS Cluster Server

VERITAS Cluster Server (VCS) connects multiple, independent systems into a management framework for increased availability. Each system, or node, runs its own operating system and cooperates at the software level to form a cluster. VCS links commodity hardware with intelligent software to provide application failover and control. When a node or a monitored application fails, other nodes can take predefined actions to take over and bring up services elsewhere in the cluster.

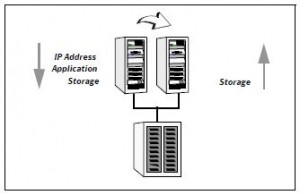

Figure 1

When VCS detects an application or node failure, VCS brings application services up on a different node in a cluster. VCS virtualizes IP addresses and system names, so client systems continue to access the application and are unaware of which server they use. For example, in a two-node cluster consisting of db-server1 and db-server2, a virtual address may be called db-server. Clients access db-server and are unaware of which physical server hosts the db-server.
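
The behaviour described above can be pictured with a minimal sketch. This is a toy model only, not VCS code: the `ToyCluster` class and its methods are hypothetical names introduced for illustration, and the node names are taken from the example in the text. It shows a single virtual name that keeps resolving while the node behind it changes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Toy model (hypothetical): one virtual name that follows the service."""

    virtual_name: str
    nodes: list[str]
    active: str = ""
    failed: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Start the service on the first configured node.
        self.active = self.nodes[0]

    def resolve(self, name: str) -> str:
        """Return the node that currently serves the virtual name."""
        if name != self.virtual_name:
            raise KeyError(name)
        return self.active

    def fail(self, node: str) -> None:
        """Mark a node as failed and, if it was active, fail over."""
        self.failed.add(node)
        if node == self.active:
            survivors = [n for n in self.nodes if n not in self.failed]
            if not survivors:
                raise RuntimeError("no healthy node left in the cluster")
            self.active = survivors[0]


cluster = ToyCluster("db-server", ["db-server1", "db-server2"])
before = cluster.resolve("db-server")
cluster.fail("db-server1")
after = cluster.resolve("db-server")
print(before, "->", after)
```

Clients in this sketch only ever ask for `db-server`; which physical node answers is an internal detail of the cluster.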

VCS offers efficient real-time fault detection with the Intelligent Monitoring Framework. It uses an event-driven design that is asynchronous and provides instantaneous resource state change notifications. A resource state change event is quickly detected by VCS agents and then communicated to the VCS engine for further action. This improves fault detection significantly, allowing VCS to take corrective actions faster, which results in reduced service group failover times.

About External Data Storage

One of the main tenets of clustering is the ability to restart a failed application service on an alternate or passive target node. The key component in ensuring this functionality is a shared storage resource, typically allocated from a SAN-based storage array. Shared storage allows uninterrupted access to the data files that an application needs to be brought online. Because of this requirement, applications in a cluster can run only on systems that have visibility of that storage. This model, however, does not stipulate that all storage be shared. A common configuration is for the SQL Server binaries to reside locally on each cluster node while the database files reside on a shared location.

Cluster configurations with shared storage

- Typical cluster configuration with basic shared storage

Typically, in a cluster configuration with basic shared storage, all the cluster nodes access the storage device over a SAN. The application may run on any of the cluster nodes. However, the application data (database tables, redo logs, control files and so on) is stored on the shared disk. In the event of a failure, the application starts on the failover cluster node. Since the application data is stored on the shared disk, the failover node continues to access it without any downtime.

Figure 2: Sample cluster configuration with basic shared storage

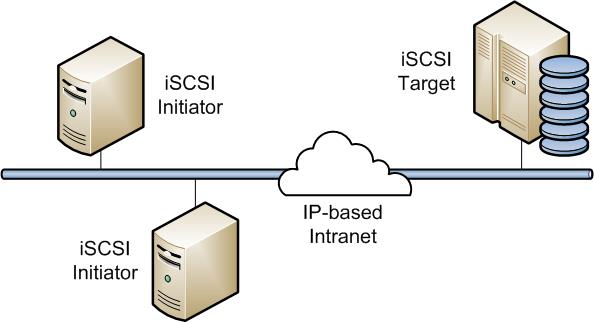

You can provide shared storage using the iSCSI protocol over IP, or you can use virtual Fibre Channel (VFC) in a Hyper-V environment. With VFC, the Hyper-V virtual Fibre Channel feature, you can connect to Fibre Channel storage from within a virtual machine. This requires investment in Fibre Channel cabling and NPIV-enabled SANs. The hosts must also have an HBA driver that supports virtual Fibre Channel. The typical storage configuration for VFC is shown in Figure 3.

Figure 3

iSCSI enables the transport of block-level storage traffic over IP networks. It uses standard Ethernet switches and routers to move the data from server to storage. As a prerequisite, an iSCSI initiator must be configured on every virtual machine so that it can communicate with the iSCSI targets on the storage device. See Figure 4.

Figure 4

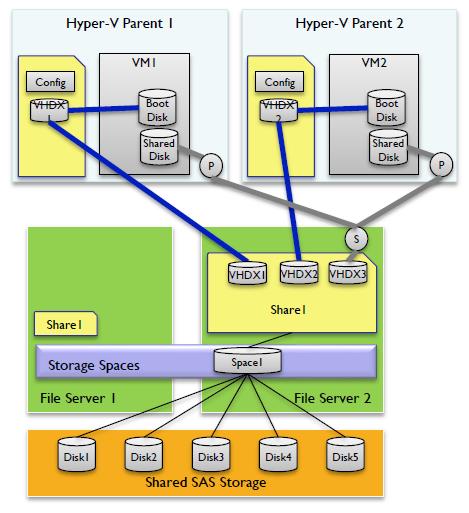

- Typical cluster configuration with shared VHDX disks

Introduced in Windows Server 2012 R2, shared VHDX files make cluster configuration much easier. Arguably the most relevant time-saving feature for cluster configuration on Windows Server 2012 R2 is that VCS can now be deployed using shared VHDX files on top of CSVs (Cluster Shared Volumes). Windows Server 2012 R2 provides the ability to share a virtual hard disk in the VHDX format between multiple virtual machines. This article provides details and insight into the VHDX format but, more importantly, shows how it can be used as the underlying shared storage layer needed to establish a VCS cluster between Hyper-V guests. Upon completion of this document you will be able to configure cluster-aware applications between these virtual machines, as supported by SFWHA.

The use of shared VHDX files works in this scenario due to their support for persistent reservations.

This capability in turn provides the method for resolving contention for storage LUNs and transferring them between cluster nodes during a failover operation. SCSI-3 persistent reservations enable multiple cluster nodes to coordinate ownership of each disk and therefore allow the LUN(s), and the VHDX files associated with them, to transition from the active node to a passive node in the cluster.
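
As a rough illustration of how reservation-based ownership lets a passive node take over a disk, here is a deliberately simplified sketch. It is not the SCSI-3 protocol itself, which defines several reservation types and commands; the `ToySharedDisk` class, its method names, and the node keys are all hypothetical.

```python
from typing import Optional


class ToySharedDisk:
    """Simplified, hypothetical model of reservation-style disk ownership."""

    def __init__(self) -> None:
        self.registered = set()              # keys of nodes that have registered
        self.holder: Optional[str] = None    # key that currently owns the disk

    def register(self, key: str) -> None:
        """A node announces itself to the disk with its key."""
        self.registered.add(key)

    def reserve(self, key: str) -> bool:
        """Take ownership if the node is registered and nobody else holds it."""
        if key not in self.registered or self.holder not in (None, key):
            return False
        self.holder = key
        return True

    def preempt(self, key: str, victim: str) -> bool:
        """A registered node evicts another node and inherits its reservation."""
        if key not in self.registered:
            return False
        self.registered.discard(victim)
        if self.holder == victim:
            self.holder = key
        return True

    def can_write(self, key: str) -> bool:
        """Only the registered holder may write in this simplified model."""
        return key in self.registered and self.holder == key


disk = ToySharedDisk()
disk.register("node1")
disk.register("node2")
first = disk.reserve("node1")            # active node takes the disk
second = disk.reserve("node2")           # passive node is refused
took_over = disk.preempt("node2", "node1")   # failover: node2 evicts node1
print(first, second, took_over, disk.holder)
```

The point of the sketch is that ownership is arbitrated at the disk, so a surviving node can evict a failed owner without both nodes writing at the same time.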

The shared virtual hard disk for VERITAS Cluster Server (VCS) can be deployed in the following two ways:

a. Cluster Shared Volumes (CSVs) on block storage (including clustered Storage Spaces).

b. Scale-Out File Server with SMB 3.0 on file-based storage.

Configuring an SFW HA cluster using VHDX files as shared storage

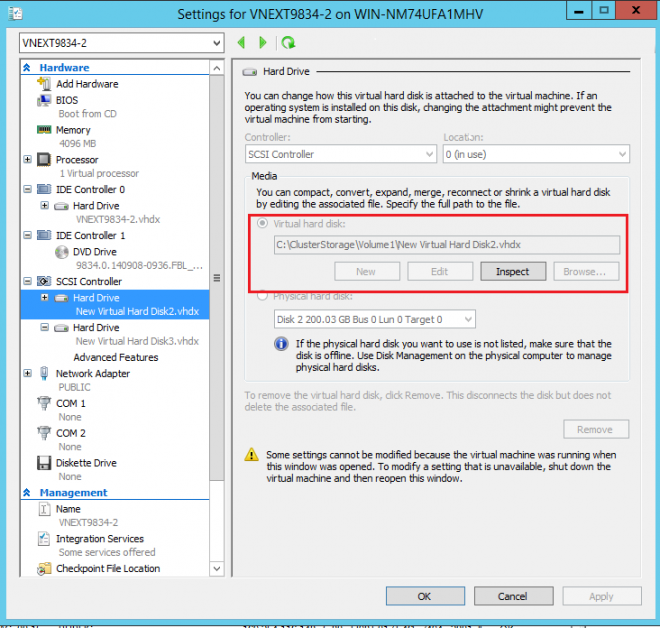

a. About Creating and enabling a shared virtual hard disk

This section describes how to create and then share a virtual hard disk that is in the .vhdx file format. Repeat this procedure for each shared .vhdx file that you want to add.

To create a virtual hard disk to share

1. In Failover Cluster Manager, expand the cluster name, and then click Roles.

2. In the Roles pane, right-click the virtual machine on which you want to add a shared virtual hard disk, and then click Settings.

3. In the virtual machine settings, under Hardware, click SCSI Controller.

4. In the details pane, click Hard Drive, and then click Add.

5. In the Hard Drive details pane, under Virtual hard disk, click New.

The New Virtual Hard Disk Wizard opens.

6. On the Before You Begin page, click Next.

7. On the Choose Disk Format page, accept the default format of VHDX, and then click Next.

Note

To share the virtual hard disk, the format must be .vhdx.

8. On the Choose Disk Type page, select Fixed size or Dynamically expanding, and then click Next.

Note

A differencing disk is not supported for a shared virtual hard disk.

9. On the Specify Name and Location page, do the following:

10. In the Name box, enter the name of the shared virtual hard disk.

11. In the Location box, enter the path of the shared storage location. If the shared storage is a CSV disk, enter the path C:\ClusterStorage\VolumeX, where C:\ represents the system drive and X represents the desired CSV volume number. If the shared storage is an SMB file share, enter the path \\ServerName\ShareName, where ServerName represents the client access point for the Scale-Out File Server and ShareName represents the name of the SMB file share.

12. Click Next.

13. On the Configure Disk page, accept the default option of Create a new blank virtual hard disk, specify the desired size, and then click Next.

14. On the Completing the New Virtual Hard Disk Wizard page, review the configuration, and then click Finish.
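
The constraints in the steps above (the .vhdx format, no differencing disks, and the two location forms) can be captured in a small planning helper. This is only a sketch for checking inputs before running the wizard; the function names and the sample values `Data1`, `FileServer1`, and `Share1` are hypothetical, and the helper assumes the disk name is given without an extension.

```python
SHAREABLE_TYPES = {"fixed", "dynamic"}  # differencing disks cannot be shared


def csv_location(volume_number: int, system_drive: str = "C:") -> str:
    """Location on a Cluster Shared Volume: <drive>\\ClusterStorage\\VolumeX."""
    return f"{system_drive}\\ClusterStorage\\Volume{volume_number}"


def smb_location(server_name: str, share_name: str) -> str:
    """Location on a Scale-Out File Server share: \\\\ServerName\\ShareName."""
    return f"\\\\{server_name}\\{share_name}"


def shared_vhdx_path(location: str, name: str,
                     disk_format: str = "vhdx", disk_type: str = "fixed") -> str:
    """Validate the wizard choices and return the full path of the disk file."""
    if disk_format.lower() != "vhdx":
        raise ValueError("a shared virtual hard disk must use the .vhdx format")
    if disk_type.lower() not in SHAREABLE_TYPES:
        raise ValueError("a differencing disk cannot be shared")
    return f"{location}\\{name}.vhdx"


csv_path = shared_vhdx_path(csv_location(1), "Data1")
smb_path = shared_vhdx_path(smb_location("FileServer1", "Share1"), "Data1",
                            disk_type="dynamic")
print(csv_path)
print(smb_path)
```

Rejecting an unsupported format or disk type up front avoids discovering the problem only when the sharing check box cannot be enabled.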

b. To share the virtual hard disk

1. In the virtual machine settings, under SCSI Controller, expand the hard drive that you created in the previous procedure.

2. Click Advanced Features.

3. In the details pane, select the Enable virtual hard disk sharing check box.

4. Click Apply, and then click OK.

Note: If the check box appears dimmed and is unavailable, you can do either of the following:

- Remove and then add the virtual hard disk to the running virtual machine. When you do, ensure that you do not click Apply when the New Virtual Hard Disk Wizard completes. Instead, immediately configure sharing in Advanced Features.

- Stop the virtual machine, and then select the Enable virtual hard disk sharing check box.

5. Add the hard disk to each virtual machine that will use the shared .vhdx file. When you do, repeat this procedure to enable virtual hard disk sharing for each virtual machine that will use the disk.

Repeat the above procedure for each virtual machine that will participate in the VCS configuration. Inside the virtual machine, the shared disk appears as a regular disk in Disk Management (diskmgmt.msc), and you can start using it as a shared disk right away.
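
When many virtual machines share the same disk, repeating the GUI procedure becomes tedious. The sketch below generates the command lines for a scripted equivalent. It assumes the Hyper-V PowerShell module's `New-VHD` and `Add-VMHardDiskDrive` cmdlets and the `-ShareVirtualDisk` switch as documented by Microsoft for Windows Server 2012 R2; verify the parameters against the module on your hosts before running anything. The Python function name, the VM names, the path, and the 40 GB size are hypothetical.

```python
from __future__ import annotations


def share_disk_commands(vhdx_path: str, vm_names: list[str],
                        size_gb: int = 40) -> list[str]:
    """Build PowerShell command lines: create one disk, attach it to each VM.

    Assumption: cmdlet and parameter names follow the Windows Server 2012 R2
    Hyper-V module; they are emitted as text only and are not executed here.
    """
    commands = [f"New-VHD -Path {vhdx_path} -Fixed -SizeBytes {size_gb}GB"]
    for vm in vm_names:
        commands.append(
            f"Add-VMHardDiskDrive -VMName {vm} -ControllerType SCSI "
            f"-Path {vhdx_path} -ShareVirtualDisk"
        )
    return commands


commands = share_disk_commands(
    "C:\\ClusterStorage\\Volume1\\Data1.vhdx", ["Node1", "Node2"]
)
for line in commands:
    print(line)
```

The disk is created once and attached once per virtual machine, which mirrors the manual procedure: one shared file, with sharing enabled on every attachment.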

Advantages:

1) The supported storage back ends for shared VHDX are SAS, FC, iSCSI, SMB, and Storage Spaces. By contrast, virtual FC supports only FC SAN, and iSCSI to the guest supports only iSCSI SAN.

2) Does not require specific hardware on Hyper-V host

3) If you want to provision multiple in-guest clusters in a Hyper-V environment, each one needs its own shared LUN. With VFC this is complex because it involves Fibre Channel investment. CSV enables you to create one large LUN where the data disks of many VMs can reside. This increases environmental stability and makes it easier for administrators to provision any number of in-guest clusters. VERITAS Cluster Server supports 64-node clusters. For such large deployments, shared VHDX is the best option.

4) Supports the following failover scenarios: Hyper-V guest failover, Hyper-V host failover, and file server failover. It also works when the CSV or the VMs are moved.

5) SMB 3.0 brings enterprise-class storage to Hyper-V deployments, and a file share is very easy to configure. SMB 3.0 file shares are on different physical infrastructure and can be permissioned for more than one host or cluster. Therefore, in theory, a guest cluster could reside on more than one host cluster. With the shared VHDX stored on a single SMB 3.0 file share, you have a solution that can quadruple the high availability of your application.

6) Useful for SFWHA stretch cluster configurations, that is, campus cluster or RDC.

7) Benefits from storage pooling capabilities, if these are optionally configured.

Infrastructure checklist

The environment must meet the following requirements for the solution to work.

For Hyper-V hosts

1) Must be running Windows Server 2012 R2

For Guests (Virtual Machines)

1) Windows Server 2012 or 2012 R2 are supported

2) Install the Hyper-V Integration Components
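
The checklist above is easy to turn into a pre-flight check. The following sketch only compares values that you supply against the stated requirements; it does not query Hyper-V, and the function name, the dictionary keys, and the sample machines are hypothetical.

```python
SUPPORTED_HOST_OS = {"Windows Server 2012 R2"}
SUPPORTED_GUEST_OS = {"Windows Server 2012", "Windows Server 2012 R2"}


def check_environment(host_os, guests):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if host_os not in SUPPORTED_HOST_OS:
        problems.append(f"host runs {host_os}; Windows Server 2012 R2 is required")
    for guest in guests:
        if guest["os"] not in SUPPORTED_GUEST_OS:
            problems.append(f"{guest['name']}: guest OS {guest['os']} is not supported")
        if not guest["integration_components"]:
            problems.append(f"{guest['name']}: Hyper-V Integration Components missing")
    return problems


guests = [
    {"name": "Node1", "os": "Windows Server 2012 R2", "integration_components": True},
    {"name": "Node2", "os": "Windows Server 2008 R2", "integration_components": False},
]
problems = check_environment("Windows Server 2012 R2", guests)
for problem in problems:
    print(problem)
```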

Limitations of shared VHDX

1) Host-level backups of the guest cluster are not possible.

2) A hot resize of the shared VHDX is not possible.

3) Storage live migration of the shared VHDX is not supported.

Summary

The shared VHDX feature in Windows Server 2012 R2 gives IT staff a new, less complex, and lower-cost method to deploy guest failover clusters for cluster-aware applications with high-availability requirements. By selecting either the CSV or the Scale-Out File Server architecture to store shared VHDX files, you can abstract the underlying storage system from the guest virtual machine failover clusters without sacrificing the ability to deploy robust, reliable, and high-performance infrastructures.