How AI has improved the efficiency in identifying real product issues


The rise of agile methodology has pushed enterprises to innovate and deliver at high speed. Delivery cycles keep shrinking, while the technical complexity needed to provide a positive user experience and stay competitive keeps growing, as does the rate at which compelling innovations must be introduced.

To meet our continuous integration and delivery needs, we turned to continuous automated testing. An automated preflight suite of 2000+ tests validates the stability of the codebase (Trunk) at all times.

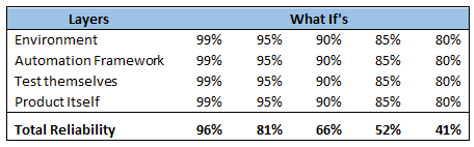

While automated testing saves time, analyzing the failures consumes a lot of it. When the product under test has multiple deployment types and complex features, automated testing has to deal with several layers. Typically these are the environment in which the tests run, the automation framework, the tests themselves (which are written with certain assumptions), and the product itself, which keeps changing.

As the table above shows, the reliability of each layer has a cumulative effect on the reliability of the automation results. In our case, if we assume each of the four layers is 95% reliable, the overall reliability of the automation results comes to about 81% (0.95 × 0.95 × 0.95 × 0.95 ≈ 0.81). This means approximately 380 tests (19% of 2000+ tests) fail because of inconsistencies in these layers. Analyzing this many failures is time-consuming. In addition, some of them get reported as product defects and consume further bandwidth before being closed as “nonreproducible,” “open in error,” etc.
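The arithmetic behind these figures can be reproduced with a short sketch. It assumes the four layers fail independently, so their reliabilities multiply; the independence assumption and the variable names are ours, while the 95% figure and the suite size of 2000 come from the text.

```python
import math

# Per-layer reliability; 95% is the article's illustrative figure.
layer_reliability = {
    "environment": 0.95,
    "automation framework": 0.95,
    "tests": 0.95,
    "product": 0.95,
}

# Assumption: layers fail independently, so a result is trustworthy
# only if every layer behaves, and the reliabilities multiply.
overall = math.prod(layer_reliability.values())

suite_size = 2000  # lower bound of the "2000+" preflight suite

# Round the unreliable share to whole percent, as the text does (19%).
unreliable_share = round(1 - overall, 2)
expected_noise_failures = round(suite_size * unreliable_share)

print(f"overall reliability: {overall:.4f}")
print(f"expected layer-induced failures: {expected_noise_failures}")
```

Lowering any single layer's value in `layer_reliability` shows how quickly the overall figure degrades, which is why even small per-layer flakiness produces hundreds of failures to analyze.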

In Backup Exec, we realized the need to address this inefficiency. With multiple parameters monitored and recorded in each automated run, we had enough data to build a predictive model. The objective was to predict, with a given confidence (probability), whether a test failure is a product failure or not, so that effort is spent only on the failures that matter most.

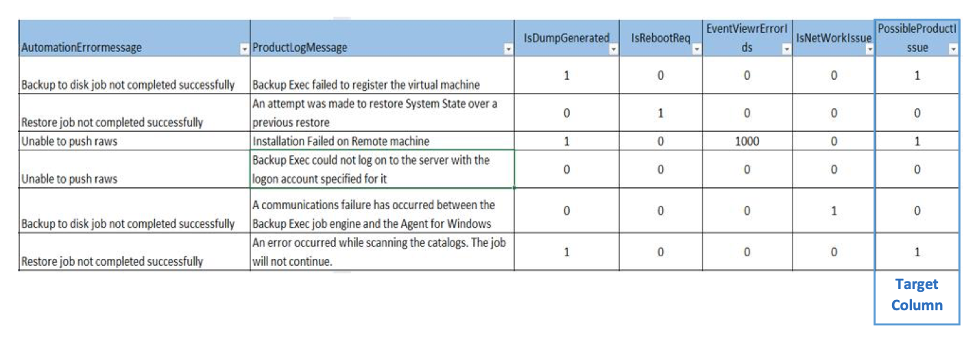

With input from Dev, QA SMEs, and statistical analysis techniques, we identified a list of parameters that affect test results; the accompanying table lists some of them. Its last column, “PossibleProductIssue”, indicates whether the test outcome turned out to be a product issue (1) or not (0).

The data was then passed to several machine learning algorithms for training and testing, and the one that gave the best accuracy, Random Forest, was used to build the prediction model.
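The train-and-compare step might look roughly like the sketch below, which uses scikit-learn. This is not the Backup Exec team's code: the data is synthetic, the three feature columns are hypothetical stand-ins for the recorded parameters, and logistic regression is our choice of a second candidate for comparison. Only the label name `PossibleProductIssue` and the use of Random Forest come from the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000

# Synthetic stand-in for historical failure records.
# All three feature columns are hypothetical.
X = np.column_stack([
    rng.integers(0, 2, n),   # hypothetical: environment freshly provisioned (0/1)
    rng.random(n),           # hypothetical: historical flakiness rate of the test
    rng.integers(0, 50, n),  # hypothetical: product changes since the last pass
])

# Label plays the role of "PossibleProductIssue": 1 = product issue, 0 = not.
signal = 0.08 * X[:, 2] - 3.0 * X[:, 1] + rng.normal(0, 0.5, n)
y = (signal > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Candidate algorithms; the article names only Random Forest.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

# Train each candidate and score it on the held-out failures.
scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))

best = max(scores, key=scores.get)
print(scores, "best:", best)

# Probability that each held-out failure is a product issue (class 1).
product_issue_probability = candidates["random_forest"].predict_proba(X_test)[:, 1]
```

Which candidate wins on this synthetic data says nothing about the real data set; the point is only the shape of the workflow: split, fit each algorithm, compare held-out accuracy, then use `predict_proba` to obtain a probability per failure.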

The prototype produced predictions with 85% accuracy (i.e., 323 out of 380 failures), saving a lot of time on a recurring basis. The next step is to incorporate it into the automation framework and start using it!
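One way such predictions could be used inside an automation framework is to route each failure by its predicted probability. The sketch below is purely illustrative: `FailurePrediction`, `triage`, the 0.5 threshold, and the test names are all hypothetical and not part of the prototype described above.

```python
from dataclasses import dataclass


@dataclass
class FailurePrediction:
    test_name: str                    # hypothetical test identifier
    product_issue_probability: float  # model output in [0, 1]


def triage(predictions, threshold=0.5):
    """Split failures into those to investigate first and those to defer."""
    investigate = [p for p in predictions if p.product_issue_probability >= threshold]
    defer = [p for p in predictions if p.product_issue_probability < threshold]
    # Most likely product issues go to the front of the queue.
    investigate.sort(key=lambda p: p.product_issue_probability, reverse=True)
    return investigate, defer


# Hypothetical failures from one automated run.
failures = [
    FailurePrediction("restore_after_full_backup", 0.62),
    FailurePrediction("agent_install_smoke", 0.08),
    FailurePrediction("dedupe_storage_job", 0.91),
]

investigate, defer = triage(failures)
print("investigate:", [p.test_name for p in investigate])
print("defer:", [p.test_name for p in defer])
```

The threshold is a tuning knob: lowering it sends more failures to engineers and reduces the chance of missing a real product issue, at the cost of more analysis time.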

This is one example of how AI is making modern development practices more intelligent and efficient.
