Can the files in the \Data\Classification\content folder be deleted manually?

11-27-2017 06:09 AM

Hi

I am classifying files from a 3 TB share and in hindsight probably should have selected a small set of data as it seems to not be purging the files out before trying to process more.

The drive has 400GB of space but has reached the max threshold set (10GB), and therefore processing has stopped. Most of the data is here…

D:\DataInsight\Data\Classification\Content\14…

Can I just delete the files in this folder manually or is there a better course of action? A setting I have missed maybe? I cannot realistically keep expanding the drive size!

Not sure it's relevant but I am not using the Windows Filer agent

Thanks

11-27-2017 01:01 PM - edited 04-16-2018 11:12 AM

TTree:

Deletion will result in failure of the request. When the files are completed they should be deleted and removed from the temporary location. It is very probable that the network transfer speed would exceed the speed at which files can be opened and traversed while comparing to all active policies. It is imperative that you have sufficient space to contain the classification request's dataset.
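To make the space requirement concrete, here is a rough back-of-the-envelope sketch in Python. It assumes constant fetch and classification rates and that each file is deleted as soon as it has been classified. The function name and the example rates are illustrative assumptions, not figures from Data Insight.

```python
def peak_temp_space_gb(dataset_gb, fetch_gb_per_hour, classify_gb_per_hour):
    """Estimate the largest backlog of fetched-but-unclassified content.

    Simplified model (an assumption, not Data Insight's actual behavior):
    both rates are constant and content is deleted right after classification.
    """
    if dataset_gb < 0:
        raise ValueError("dataset size must not be negative")
    if fetch_gb_per_hour <= 0 or classify_gb_per_hour <= 0:
        raise ValueError("rates must be positive")
    if classify_gb_per_hour >= fetch_gb_per_hour:
        # Classification keeps pace with the transfer: no backlog builds up.
        return 0.0
    # The backlog grows for as long as the transfer is still running.
    fetch_hours = dataset_gb / fetch_gb_per_hour
    return (fetch_gb_per_hour - classify_gb_per_hour) * fetch_hours


# Hypothetical rates: content arrives at 100 GB/h, is classified at 25 GB/h.
print(peak_temp_space_gb(3000, 100, 25))
```

With these made-up rates, a 3 TB request would peak at roughly 2.25 TB of temporary content. If classification keeps pace with the transfer, the same model predicts almost no backlog.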

While continually adding disk space is not desirable there is an alternative.

By default, the location for storing file content while it is being classified is C:\DataInsight\data. To modify the location, set the following custom configuration properties on the Settings > Inventory > Data Insight Servers > Classification Server > Advanced Settings page > Set custom properties:

- Property name: classify.fetch.content_dir

- Property value: The directory name, such as F:\DIcontent

Note: To protect the content, Veritas recommends encrypting the folder.

Note: Service restart is required for the change to the directory to take effect.
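Before pointing classify.fetch.content_dir at a new location, it may be worth confirming that the target volume has room for the content. The following is a minimal Python sketch using only the standard library. The helper names and the required size are hypothetical, and it does not read or write any Data Insight setting.

```python
import os
import shutil


def free_space_gb(path):
    """Return the free space, in GB, on the volume that holds `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / (1024 ** 3)


def can_hold(path, required_gb):
    """True if `path` is an existing directory with at least `required_gb` free."""
    return os.path.isdir(path) and free_space_gb(path) >= required_gb


# Hypothetical check against the current directory; on the Classification
# Server you would pass the candidate content directory instead.
print(can_hold(".", 0))
```

On the server, one might call `can_hold(r"F:\DIcontent", 900)` with whatever size the planned request is expected to need.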

11-28-2017 04:11 AM

Hi Rod

Thanks for that information. It does seem that I perhaps selected too much data to classify in one job. Do you have any guidelines on roughly how much temp space it's going to need based on how much is selected to classify? For example, I selected 3TB, would this need 1TB of temp space or the whole 3TB? Can't the process do it in chunks?

I've asked the customer to raise the disk temporarily to 900GB but it sounds like it might be best for me to cancel the current task and do it in smaller jobs if it still can't complete.

The data directory we've selected is on a separate drive from the DI install (d:\ rather than c:\), but this obviously has lots of other items in there too. It probably won't make much difference to my current scenario as it will still run out, but is it still good practice to move the 'classify.fetch.content_dir' as you mention?

Thanks

11-28-2017 06:32 AM

TTree, one can also stop the network transmission and allow the classification engine to catch up. Basically you set a threshold for stopping transfer then pick it back up when you reach a disk free threshold. This means typically you are operating within that band for the duration of the request but it will not force you to cancel or reduce the request.

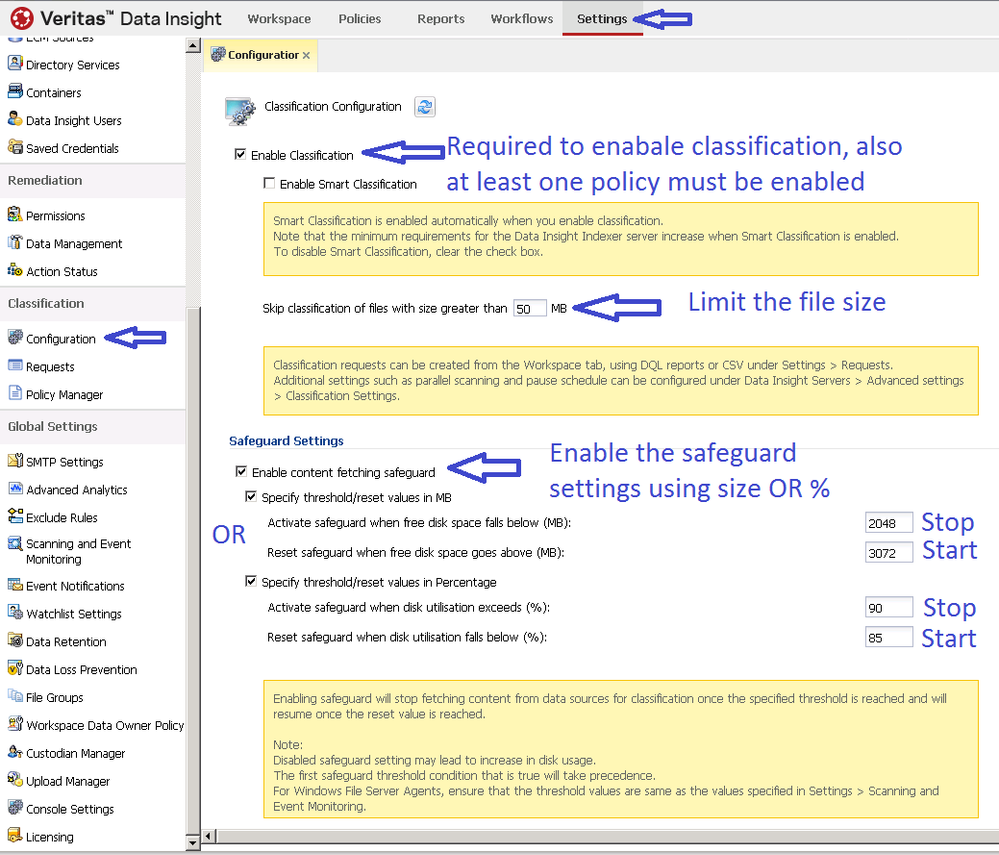

You can achieve it by using the classification settings:

You set the point at which to stop network transfers. Once classification has deleted files and free space reaches the reset goal (in MB or as a percentage of free space), the transfers begin again. It is set on the Settings > Classification / Configuration page, as described in the note below.

You might also want to consider that option rather than stopping the request, reducing its size, and initiating a new request.

Rod

Note:

Select the check box to monitor the disk usage on the Classification Server node, and to prevent it from running out of disk space by implementing safeguards.

Specify threshold/reset values in MB / Specify threshold/reset values in percentage:

You can specify the threshold for disk utilization in terms of size and percentage. The DataInsightWatchdog service initiates the safeguard mode for the Classification Server node if the free disk space falls under the configured thresholds. The DataInsightWatchdog service automatically resets the safeguard mode when the free disk space is more than the configured thresholds. You can edit the threshold limits as required. If you specify values in terms of both percentage and size, then the condition that is fulfilled first is applied to initiate the safeguard mode.
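The threshold/reset behavior described above can be pictured as a simple hysteresis band. The Python sketch below is only an illustration of that idea: the class, its field names, and the example values are hypothetical and are not the DataInsightWatchdog implementation. It assumes that safeguard mode starts when either threshold is crossed and, as a further assumption, that it resets only once both reset values are exceeded.

```python
from dataclasses import dataclass


@dataclass
class DiskSafeguard:
    threshold_mb: float   # enter safeguard mode below this much free space
    reset_mb: float       # leave safeguard mode above this much free space
    threshold_pct: float  # same limits, expressed as percent of the volume
    reset_pct: float
    paused: bool = False

    def update(self, free_mb, total_mb):
        """Record a free-space reading and return True while transfers pause."""
        free_pct = 100.0 * free_mb / total_mb
        if not self.paused:
            # Whichever condition is fulfilled first starts safeguard mode.
            if free_mb < self.threshold_mb or free_pct < self.threshold_pct:
                self.paused = True
        else:
            # Assumption: resume only when both reset values are exceeded.
            if free_mb > self.reset_mb and free_pct > self.reset_pct:
                self.paused = False
        return self.paused


# Hypothetical 400 GB volume with a 10 GB threshold and a 20 GB reset value.
guard = DiskSafeguard(threshold_mb=10240, reset_mb=20480,
                      threshold_pct=2.0, reset_pct=4.0)
for free_mb in (50000, 9000, 15000, 45000):
    print(free_mb, guard.update(free_mb, total_mb=409600))
```

In this sketch the transfers pause at the 9000 MB reading, stay paused at 15000 MB because the reset value has not been reached, and resume at 45000 MB. That gap between threshold and reset is the band the request operates within.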

11-28-2017 07:44 AM

TTree -

Advice on estimating the space or time needed is difficult to give as a generic, one-size-fits-all statement. The process depends on various types of resources across multiple nodes and the network. Planning is often our best gauge for success.

Even cancelling a running request requires some consideration: the in-memory processes on each node must be unloaded and committed, informing the master of completion, so that transfer and classification can quiesce. All jobs related to classification delete the files belonging to the canceled request from the inbox before picking up a new task, so issuing a new request to replace the one being cancelled will not be instantaneous.

While this is a complicated new feature, there are resources to assist you in deciphering the configuration requirements to meet your goals. The OLH (OnLine Help) provides detailed steps and explanations in some of the areas you are pursuing. A good starting point is the following link, opened on the Management Server itself or from a browser with access to the MS (replace localhost with its name): < https://localhost/symhelp/ShowInTab.action?ProdId=DISYMHELPV_6_1_CG&vid=v125104444_v125239294&locale=EN_US&context=DI6.1 >.

There will also soon be a 6.1 Administration course coming from Veritas Education that has a complete section on classification.

Combining the ability to throttle the speed with which transfers hit the disk, directing classification to use a fast, large disk, and unchecking the pause feature where desirable and feasible will give you the tools to plan the classification of your customer's data while maintaining the health and operation of your Data Insight environment.

I hope that helps you out TTree. I am enjoying researching your enquiries.

Rod

11-30-2017 01:47 AM

Hi Rod

Thanks for all this information

I have got those thresholds set but it didn't move on for 10 days so something is not quite right. Maybe it's got stuck on a particularly big share/files. One thing I will definitely reduce before cancelling and splitting the job into smaller chunks is the 'Max file size' to classify. Someone at Veritas EMEA was quite bullish about setting the max file size to a GB. I will reduce that and see what happens as this just doesn't make sense to me.

I'll try and keep an eye out for that 6.1 Admin Training as I definitely want to get myself on that!

Thanks again

12-07-2017 08:47 AM

Hi again Rod

Back on-site with the customer. I cancelled the big job - it appears to be running out of space on the 3 largest shares. Started one of the shares, after 2 hours it appears to have copied over exactly the size of the data to the temp area but doesn't appear to be processing it for 'classification' (195GB).

I've studied the documentation and tried to raise the threads on the classification component (from automatic) to 10, but it doesn't appear to make any difference. However, could that be because I've already started the scan? The machine is a single node with all components on it (incl. the classification).

Even before it copied everything, it didn't appear to be using much of the 16 cores (10-15% CPU max) or 32GB RAM (8GB used). Could this part be restricted by the network bandwidth, as mentioned earlier? The servers are all local to each other so should be pretty good (will ask for exact network details).

Anything else I could try to speed it up now it's copied over or do I have a potential issue perhaps?

Thanks

01-09-2018 10:21 AM

Sorry TTree, I did not see your response. This is a month old and I assume you have rectified the situation already, but as a follow-up on the issue of not seeing progress on classification when an Administrator checks the job status: I have found that many miss the setting that instructs classification to pause on the Winnas device. The Veritas knowledge base has an article on the classification pause function that can walk an Administrator through confirming whether pause is enabled, or using the feature as it is designed.

Let me know if there are further questions requiring our attention.

Rod

01-10-2018 01:44 AM

Thanks Rod, no worries, I understand!

Yes, I got through this issue by just reducing the amount I attempt to scan at any given time. Seems to be happy and I haven't run into the same issue again.

I'm working on another customer project at the moment and have a question around 'false positives' on MSG files and the UK PII policy. I'll start a new thread though!

Regards

01-10-2018 06:46 AM

Sure, I'll watch for it.

I know we did patch Turkey's in 6.1HF3 (click on Data Insight under https://sort.veritas.com/patch/finder and scroll down).

I can research your issue when the question is posted.

Rod
