
Disk-based data backup and tape-storage-based recovery: get the best out of your tape drives


Respected forum members,

This is my first ever thread, and I have tried to make it as informative as possible. This blog post is inspired by Curtis Preston's article http://searchdatabackup.techtarget.com/tip/Tape-backup-best-practices-How-to-improve-tape-storage-performance, but it is not a copy/paste job: I have used only the headings from that article.

Tape is not going away, as much as I would like to throw it out. So, to get the best out of your tape drives in a NetBackup environment with disk staging, please put your architect hat on and proceed further.

Understand the limits of your tape drive

An LTO-4 tape drive's maximum native (uncompressed) transfer rate is 120 MB/s. The LTO-4 drives we used had about 128 MB of SRAM as cache. The more data you can send to the drive cache, the faster your drive is going to perform.
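To see why keeping the cache full matters, here is a toy model (an assumption, not how any specific drive firmware behaves) of how long a full 128 MB cache can keep an LTO-4 drive writing at its 120 MB/s native rate when the host feeds data more slowly. It ignores speed matching, compression and repositioning.

```python
# Toy model of a tape drive's cache draining when the host feeds data more
# slowly than the drive writes it. Assumes constant rates; ignores speed
# matching, compression and repositioning (all simplifications).


def seconds_until_cache_empty(cache_mb: float, drive_mb_s: float, feed_mb_s: float) -> float:
    """Seconds before a full cache runs dry, or infinity if the feed keeps up."""
    net_drain = drive_mb_s - feed_mb_s  # MB/s leaving the cache faster than it arrives
    if net_drain <= 0:
        return float("inf")  # feed matches or exceeds the write rate
    return cache_mb / net_drain


CACHE_MB = 128.0     # drive cache size quoted in the text
NATIVE_MB_S = 120.0  # LTO-4 native (uncompressed) rate

for feed in (40.0, 80.0, 120.0):
    t = seconds_until_cache_empty(CACHE_MB, NATIVE_MB_S, feed)
    print(f"feed {feed:5.1f} MB/s -> cache empty after {t:.2f} s")
```

Even a large cache only buys a few seconds when the feed rate is well below the drive rate, so the real fix is a faster source.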

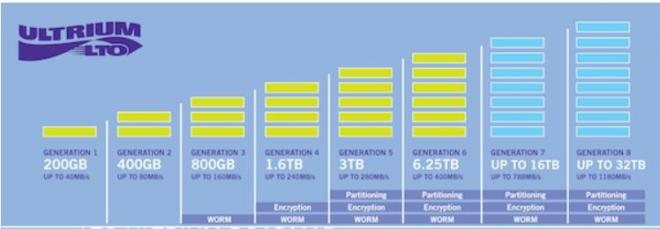

The table above gives speed and capacity numbers for each LTO drive type and media. My goal was to achieve 240 MB/s on LTO-4 in my environment. This is not an easy task: you will have to apply Curtis Preston's points as well as look at Symantec's tuning guide.

Know the source: data size, pipe, and compression rates

Database transaction logs and archive logs tend to generate many small files, which can hurt your RPO/RTO: a modern tape drive's throughput tracks the size of the data it is fed, up to a maximum ceiling of 240 MB/s. So make sure you involve your DBAs when designing the backup infrastructure and backup policy standards.

Some data compresses well. I got 2.2 TB on a single LTO-4 cartridge. I have seen the best compression with SAP, followed by Oracle database backups, then SQL and NDMP.
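As a quick sanity check on that 2.2 TB figure, the arithmetic below compares it with LTO-4's 800 GB native cartridge capacity. It assumes decimal units and that the drive keeps writing compressed data to media at its 120 MB/s native rate, so the host-side rate scales with the compression ratio; real drives and interfaces may cap this.

```python
LTO4_NATIVE_GB = 800.0    # LTO-4 native cartridge capacity
LTO4_NATIVE_MB_S = 120.0  # LTO-4 native transfer rate


def compression_ratio(stored_gb: float, native_gb: float = LTO4_NATIVE_GB) -> float:
    """Host data stored on one cartridge divided by its native capacity."""
    return stored_gb / native_gb


def host_side_mb_s(ratio: float, native_mb_s: float = LTO4_NATIVE_MB_S) -> float:
    # Assumes the drive writes compressed data to media at its native rate,
    # so it accepts host data `ratio` times faster (an idealization).
    return native_mb_s * ratio


ratio = compression_ratio(2200.0)  # 2.2 TB on one cartridge, from the text
print(f"compression ratio {ratio:.2f}:1, host-side rate ~{host_side_mb_s(ratio):.0f} MB/s")
```

A 2.75:1 ratio is well above the 2:1 assumed in LTO-4's rated 1.6 TB compressed capacity, which is why highly compressible data can push a drive past its 240 MB/s headline figure.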

Know the data path

What type of network does your media server have? Is it a 10 Gb network?

We moved to a 10 Gb backup network to avoid saturating the NIC on the media server.

The move had a positive impact: it reduced backup failures as well as data fragmentation on the disk staging storage unit (DSSU), allowing up to 160 MB/s of write speed even for uncompressed data. The tape drives also achieved compression of over 2 TB per tape. These numbers are well above the manufacturer's figures.

Internal to the server: give your tape drives dedicated Fibre Channel paths

Best practice is always to separate disk and tape traffic onto different HBA cards, but we understand the benefits of running both on one HBA card.

The rule of thumb is to separate them, but with today's technology you can theoretically have a disk port and a tape port on the same HBA, since both require initiator mode. By contrast, an HBA used for SAN Client can only do SAN Client, because the entire card runs in either initiator mode or target mode.

While pushing both workloads down the same card saves money and keeps things simple, there are disadvantages in doing so.

Disadvantages:

HBA Reset

If an HBA card needs to be reset for any reason (there can be many), it will affect both disk and tape operations with slowness or complete failure. Separating tape and disk into their own zones prevents a problem on one from causing a larger-scale outage on the other.

Performance

An 8 Gb/s HBA port is often quoted at a theoretical 1063 MB/s, but that figure is simply the 8.5 Gbaud line rate divided by 8; after 8b/10b encoding and framing, the usable payload is about 800 MB/s per direction. That is suitable for high-performance tape drives and disk drives, but what happens most of the time is that the bandwidth is maxed out by disk reads and tape writes alone. This can cause serious performance degradation, so architecture designs need to take real-world saturation levels into consideration.
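The two ways of converting an 8GFC port's line rate into MB/s can be compared directly. 8GFC signals at 8.5 Gbaud with 8b/10b encoding (10 line bits per data byte); the sketch below shows that dividing by 8 gives the 1063 MB/s-style figure, while accounting for encoding gives 850 MB/s before frame overhead. The roughly 800 MB/s usable figure is the commonly quoted payload after framing and is not computed here.

```python
LINE_RATE_GBAUD = 8.5  # 8GFC signalling rate


def naive_mb_s(gbaud: float) -> float:
    """Line rate divided by 8 bits per byte, ignoring line encoding."""
    return gbaud * 1000 / 8


def encoded_mb_s(gbaud: float) -> float:
    """8b/10b: each data byte costs 10 line bits, so MB/s = Mbaud / 10."""
    return gbaud * 1000 / 10


print(f"naive:   {naive_mb_s(LINE_RATE_GBAUD):.1f} MB/s")
print(f"encoded: {encoded_mb_s(LINE_RATE_GBAUD):.1f} MB/s (before frame overhead)")
```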

Another thing to think about is analyzing capacity so that you can zone the right number of FC tape drives per HBA to meet your backup window. It is recommended not to configure more than 4-6 LTO-4 tape drives per 8 Gb HBA port, and to distribute the tape drives across all available tape-zoned HBA ports.
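A rough way to sanity-check the 4-6 drives per port guideline, and to spread drives evenly, is sketched below. It assumes about 800 MB/s usable per 8 Gb port and divides by the LTO-4 native (120 MB/s) and compressed (240 MB/s) rates; the headroom factor, drive names and port names are hypothetical, and actual zoning is done in your SAN switch tooling, not in Python.

```python
import math
from itertools import cycle


def max_drives_per_port(port_mb_s: float, drive_mb_s: float, headroom: float = 1.0) -> int:
    """How many drives running at drive_mb_s fit within headroom * port_mb_s."""
    return math.floor(port_mb_s * headroom / drive_mb_s)


def assign_round_robin(drives: list[str], ports: list[str]) -> dict[str, list[str]]:
    """Spread drives evenly across tape-zoned ports, one at a time."""
    assignment: dict[str, list[str]] = {port: [] for port in ports}
    for drive, port in zip(drives, cycle(ports)):
        assignment[port].append(drive)
    return assignment


PORT_MB_S = 800.0  # approx. usable 8GFC payload per direction (assumption)
print("native 120 MB/s:", max_drives_per_port(PORT_MB_S, 120.0))
print("compressed 240 MB/s:", max_drives_per_port(PORT_MB_S, 240.0))

drives = [f"tape{i}" for i in range(8)]               # hypothetical drive names
ports = ["hba0_p0", "hba0_p1", "hba1_p0", "hba1_p1"]  # hypothetical port names
print(assign_round_robin(drives, ports))
```

The native and compressed results bracket the recommended range, which is one plausible reading of where a 4-6 drive rule of thumb comes from.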

Spreading the DSSU volume across multiple disks avoids hot spots and helps achieve better performance. Choosing the latest x86 servers over legacy servers has its advantages. My test server was a Dell R710 with 24 cores, 71 GB of memory, two dual-port FC cards and one 10 Gb port.
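The reason striping avoids hot spots is that consecutive stripe units land on different disks (columns). The sketch below models a simple RAID-0 style layout; the 64 KiB stripe unit is a hypothetical value, and the 10 columns match the striped volume mentioned later in this post.

```python
KIB = 1024
STRIPE_UNIT = 64 * KIB  # hypothetical stripe unit size
NCOLS = 10              # column count used later in the post


def column_for_offset(offset: int, stripe_unit: int = STRIPE_UNIT, ncols: int = NCOLS) -> int:
    """Column holding the byte at `offset` in a simple striped layout."""
    return (offset // stripe_unit) % ncols


def columns_touched(start: int, length: int,
                    stripe_unit: int = STRIPE_UNIT, ncols: int = NCOLS) -> list[int]:
    """Columns hit, in order, by a sequential read of `length` bytes from `start`."""
    first = start // stripe_unit
    last = (start + length - 1) // stripe_unit
    return [unit % ncols for unit in range(first, last + 1)]


cols = columns_touched(0, 1024 * KIB)  # a 1 MiB sequential read
print(cols)
```

A single 1 MiB sequential read already touches every column, so no one disk carries the whole load.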

Having more memory at your disposal helps, and the latest DDR-3 memory speeds up data transfer between the CPU and memory.

Know the backup application

You need a little insight into performance tuning for your backup application; here we use NetBackup. What follows is my research into optimizing NetBackup's buffer management.

Most tuning guides recommend a trial-and-error method to arrive at values for NUMBER_DATA_BUFFERS in a backup environment.

As the rest of this section shows, you can set any value from 32 to 2048 and still achieve zero or near-zero wait counts.

Keeping SIZE_DATA_BUFFERS at 512 KB, I tried increasing NUMBER_DATA_BUFFERS_DISK. I started with 32 buffers, and the wait counters were near zero. In theory, the more data you push into the buffers, the better the throughput should be, but NetBackup's wait counters will not easily agree with you.
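On UNIX/Linux media servers, NetBackup buffer settings are typically read from plain files named after the setting (for example SIZE_DATA_BUFFERS and NUMBER_DATA_BUFFERS_DISK) under /usr/openv/netbackup/db/config; please verify the location and names against the tuning guide for your version. The hypothetical helper below writes such files, demonstrated against a temporary directory so it does not touch a real server.

```python
import tempfile
from pathlib import Path

# Usual location on UNIX/Linux media servers (verify for your version).
NBU_CONFIG_DIR = Path("/usr/openv/netbackup/db/config")


def file_contents(settings: dict[str, int]) -> dict[str, str]:
    """Map each setting file name to the text it should contain (one integer)."""
    return {name: f"{value}\n" for name, value in settings.items()}


def write_buffer_settings(config_dir: Path, settings: dict[str, int]) -> None:
    """Hypothetical helper: write one file per setting into config_dir."""
    config_dir.mkdir(parents=True, exist_ok=True)
    for name, text in file_contents(settings).items():
        (config_dir / name).write_text(text)


settings = {
    "SIZE_DATA_BUFFERS": 512 * 1024,  # 512 KB, expressed in bytes
    "NUMBER_DATA_BUFFERS_DISK": 32,   # starting point used in the text
}

# Demo against a temporary directory rather than the real NBU_CONFIG_DIR.
with tempfile.TemporaryDirectory() as tmp:
    write_buffer_settings(Path(tmp), settings)
    for f in sorted(Path(tmp).iterdir()):
        print(f.name, f.read_text().strip())
```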

Disk staging is a key component of our enterprise backup standards. After the migration to the new LTO-4 drives, the lack of performance was evident: we were seeing numbers similar to the older LTO-2 drives.

Since the new generation of LTO-4 drives provides a generous 128 MB drive buffer, increasing the rate and size of the data sent to the drive should result in optimal performance.

We did this by increasing the number of backup application buffers, the shared-memory area where the disk reader and tape writer interact.
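The disk reader and tape writer form a classic bounded-buffer (producer/consumer) pipeline. The toy model below uses two threads and a fixed-size queue to show where the two wait counters come from: the reader waits when no empty buffer is free, and the writer waits when no full buffer is ready. The counter names only loosely mirror NetBackup's; NetBackup itself uses shared memory between processes, not Python threads.

```python
import queue
import threading
import time


def run_pipeline(n_blocks: int, n_buffers: int, write_delay_s: float = 0.0):
    """Move n_blocks from a 'disk reader' to a 'tape writer' through n_buffers slots.

    Returns (blocks written in order, wait counters)."""
    buffers: queue.Queue = queue.Queue(maxsize=n_buffers)
    waits = {"reader_waited_for_empty_buffer": 0, "writer_waited_for_full_buffer": 0}
    written: list[int] = []

    def disk_reader() -> None:
        for block in range(n_blocks):
            try:
                buffers.put_nowait(block)
            except queue.Full:
                waits["reader_waited_for_empty_buffer"] += 1
                buffers.put(block)  # block until the writer frees a slot
        buffers.put(None)  # end-of-data marker

    def tape_writer() -> None:
        while True:
            try:
                block = buffers.get_nowait()
            except queue.Empty:
                waits["writer_waited_for_full_buffer"] += 1
                block = buffers.get()  # block until the reader fills a slot
            if block is None:
                return
            time.sleep(write_delay_s)  # simulated tape write time
            written.append(block)

    threads = [threading.Thread(target=disk_reader), threading.Thread(target=tape_writer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return written, waits


written, waits = run_pipeline(n_blocks=200, n_buffers=8, write_delay_s=0.001)
print(len(written), waits)
```

With a slow writer, the reader tends to rack up "waited for empty buffer" counts; with a slow reader, the writer's "waited for full buffer" count grows instead. Adding buffers only helps if neither side is persistently slower.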

But when we tested the larger buffer count, it created serious lag for medium and large files, where either the disk reader or the tape writer spent a long time filling or emptying the buffers. Symantec's tuning recommendation is to adjust the number of data buffers so that the fill wait and empty wait counters become zero or close to zero.
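Checking wait counters after each trial is easier with a small parser. The sketch below assumes log lines containing text of the form "waited for full buffer N times, delayed M times" (and the "empty buffer" equivalent); that wording is an assumption to verify against your own NetBackup logs, and the sample lines are made up. The near-zero threshold is likewise a hypothetical choice.

```python
import re

# Assumed wording of the wait-counter lines; check against your own logs.
WAIT_LINE = re.compile(r"waited for (full|empty) buffer (\d+) times?, delayed (\d+) times?")


def parse_wait_counters(lines: list[str]) -> dict[str, tuple[int, int]]:
    """Return {"full"|"empty": (waits, delays)} for matching lines (last one wins)."""
    counters: dict[str, tuple[int, int]] = {}
    for line in lines:
        m = WAIT_LINE.search(line)
        if m:
            counters[m.group(1)] = (int(m.group(2)), int(m.group(3)))
    return counters


def near_zero(counters: dict[str, tuple[int, int]], max_waits: int = 10) -> bool:
    """Hypothetical pass/fail rule: every wait count at or below max_waits."""
    return all(waits <= max_waits for waits, _delays in counters.values())


sample = [  # made-up sample lines for illustration only
    "12:00:01.000 [1234] waited for full buffer 3 times, delayed 41 times",
    "12:00:01.000 [1235] waited for empty buffer 0 times, delayed 0 times",
]
counters = parse_wait_counters(sample)
print(counters, near_zero(counters))
```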

In practice, however, it was difficult to find a number of data buffers that achieved near-zero wait counts.

I wanted to set 1024 buffers, which meant pushing about 512 MB of data into shared memory (SIZE_DATA_BUFFERS × NUMBER_DATA_BUFFERS_DISK × MPX, with 512 KB buffers). But performance lagged seriously, as the wait counter numbers were astronomical!
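The shared-memory figure follows directly from the formula in the text. The sketch below also includes an optional drive-count factor, since some versions of the NetBackup tuning guide multiply by the number of drives as well; it defaults to 1 so the result matches the text.

```python
KB = 1024
MB = 1024 * KB


def shared_memory_bytes(size_data_buffers: int, number_data_buffers: int,
                        mpx: int = 1, drives: int = 1) -> int:
    """Buffer size x buffer count x multiplexing (x drives, default 1)."""
    return size_data_buffers * number_data_buffers * mpx * drives


total = shared_memory_bytes(512 * KB, 1024)
print(total // MB, "MB")
```

Raising MPX or the number of concurrent drives multiplies this figure, so high buffer counts can consume memory quickly.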

We tried to create a memory cache for data before it is needed. The read-ahead algorithm detects that a file is being read sequentially by comparing the block currently being requested with the last block requested. If they are adjacent, the file system can initiate a read of the next few blocks while it reads the current one.
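The sequential-detection rule just described can be modelled in a few lines. This is a simplified sketch, not VxFS's actual implementation: it prefetches a fixed number of blocks whenever a request is adjacent to the previous one, counts cache hits and misses, and never evicts anything.

```python
class SequentialReadAhead:
    """Toy read-ahead: prefetch the next blocks when two requests are adjacent."""

    def __init__(self, prefetch_blocks: int) -> None:
        self.prefetch_blocks = prefetch_blocks
        self.last_block: int | None = None
        self.cache: set[int] = set()  # blocks already in memory (never evicted here)
        self.hits = 0
        self.misses = 0  # a miss would mean a real disk read

    def read(self, block: int) -> None:
        if block in self.cache:
            self.hits += 1
        else:
            self.misses += 1
        # Sequential access detected: schedule the next few blocks into memory.
        if self.last_block is not None and block == self.last_block + 1:
            self.cache.update(range(block + 1, block + 1 + self.prefetch_blocks))
        self.cache.discard(block)
        self.last_block = block


seq = SequentialReadAhead(prefetch_blocks=4)
for b in range(10):
    seq.read(b)
rnd = SequentialReadAhead(prefetch_blocks=4)
for b in (5, 100, 7, 42):
    rnd.read(b)
print("sequential:", seq.hits, "hits", seq.misses, "misses")
print("random:    ", rnd.hits, "hits", rnd.misses, "misses")
```

After the first two reads establish a sequential pattern, every later read is served from memory, while random reads get no benefit.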

Read-ahead results in fewer, larger I/O reads from the disk staging storage. Since the next block required by the NetBackup disk reader process is already cached in the server's memory, data is transferred at the speed of (DDR-3) memory.

As a result, you can use much higher buffer counts, anywhere from 512 to 2048 instead of the 64 buffers Symantec recommends in the NetBackup tuning guide, and still achieve zero or near-zero wait times to fill or empty the application buffers.

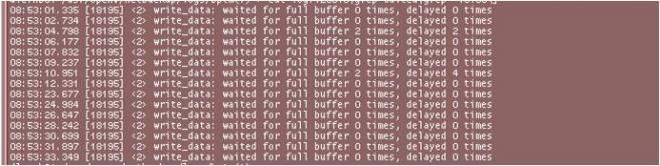

The picture above shows evidence of zero wait time to fill the buffers.

The upper panel is for the disk reader process and the lower one for the tape writer process in NetBackup.

That picture was a feast for my eyes, as I got zero or near-zero wait times for duplication, or de-staging, from the staging disk to tape media.

Below is the high-level architecture diagram I came up with. Note that I am only depicting de-staging, or duplication, from disk to tape media.

The read prefetch cache is the layer that does the magic of eliminating the delay.

Other considerations:

Memory-based I/O is quicker than disk-based I/O. By prefetching data into memory using Volume Manager's read-ahead logic, wait counters can be brought down to zero or near zero for any data type. The solution also fetches fewer but larger chunks of data from storage rather than many small ones, which reduces the number of IOPS on the storage too.
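The IOPS reduction is simple arithmetic. The sketch below counts the read requests needed for a hypothetical 100 GiB backup image at the 64 KB default read-ahead versus a 160 MiB read-ahead (10 streams of 16 MiB, matching the settings described later in this post). It ignores caching effects and request merging.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def io_count(total_bytes: int, request_bytes: int) -> int:
    """Number of requests of request_bytes needed to read total_bytes."""
    return -(-total_bytes // request_bytes)  # ceiling division


image = 100 * GIB  # hypothetical 100 GiB backup image
small = io_count(image, 64 * KIB)
large = io_count(image, 10 * 16 * MIB)
print(f"64 KiB requests: {small}, 160 MiB requests: {large}")
```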

Here are the results of my experiment:

- Small data (<1 MB): no wait times from 32 to 2048 buffers; performance tracked the incoming data size.
- Medium-sized data (1 MB-100 MB): no wait times from 32 to 2048 buffers; performance tracked the incoming data size.
- Large data (>240 MB): no wait times from 32 to 2048 buffers, and performance went over 300 MB/s on an LTO-4 drive!

Tape drives are becoming faster as the technology advances.

To take advantage of faster drives, data needs to be fed to the drive at the speed the drive expects in order to get optimal performance. Since the backup application alone cannot scale up, data prefetch and memory-based I/O become necessary.

How did I enable read prefetch?

The VxFS file system uses the read_pref_io parameter in conjunction with the read_nstream parameter to determine how much data to read ahead (prefetch). The default read-ahead is 64 KB. During the experiment this parameter was set to 16 MB/32 MB.

The read_nstream parameter reflects the desired number of parallel read requests of size read_pref_io to have outstanding at one time. The file system multiplies read_nstream by read_pref_io to determine its read-ahead size. The default value for read_nstream is 1; we set it to the number of columns in the striped volume, i.e. 10.

discovered_direct_iosz: when an application performs large I/Os to data files (e.g., a backup image) and an I/O request is larger than discovered_direct_iosz, the I/O is handled as discovered direct I/O. Discovered direct I/O is unbuffered, i.e., it bypasses the buffer cache, similar to synchronous I/O. This reduces performance here, because the buffer cache is intended to improve I/O performance. We set this value larger than 10 GB (bigger than the total data pushed through shared memory) so that backup images do not use direct I/O, even when medium or large images are read.

You will have to save these settings in /etc/vx/tunefstab. I would encourage someone to try tuning the MAXIO parameter in Veritas Foundation Suite and report the gains.
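The read-ahead size and a tunefstab entry for the three tunables can be generated as below. The device path is hypothetical, the 16 GiB discovered_direct_iosz is only a placeholder for "larger than 10 GB", and the "device tunable=value,tunable=value" line layout is my understanding of the tunefstab format; please check the tunefstab and vxtunefs man pages for your VxFS version before using it.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def readahead_bytes(read_pref_io: int, read_nstream: int) -> int:
    """VxFS read-ahead size: read_pref_io multiplied by read_nstream."""
    return read_pref_io * read_nstream


def tunefstab_line(device: str, tunables: dict[str, int]) -> str:
    """One entry: block device, then comma-separated name=value pairs (values in bytes)."""
    opts = ",".join(f"{name}={value}" for name, value in tunables.items())
    return f"{device} {opts}"


tunables = {
    "read_pref_io": 16 * MIB,
    "read_nstream": 10,                  # one stream per stripe column
    "discovered_direct_iosz": 16 * GIB,  # placeholder for "larger than 10 GB"
}
ra = readahead_bytes(tunables["read_pref_io"], tunables["read_nstream"])
print(ra // MIB, "MiB read-ahead")
print(tunefstab_line("/dev/vx/dsk/stagingdg/dssuvol", tunables))  # hypothetical device
```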

Avoid direct to tape

There is no way to send data over a 1 Gbps LAN fast enough to saturate even an LTO-3 tape drive. If you do the calculation, the most a 1 Gbps network can carry is 125 MB/s (before protocol overhead), which is well below the 160 MB/s an LTO-3 drive can take at 2:1 compression.
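The link-versus-drive comparison can be worked out as below. It converts link speed to MB/s in decimal units and compares it with the LTO-3 and LTO-4 compressed (2:1) rates; TCP/IP and file-sharing overhead are ignored, so real throughput is lower than shown.

```python
def link_mb_s(gbit_s: float) -> float:
    """Theoretical link throughput in MB/s (decimal units, no protocol overhead)."""
    return gbit_s * 1000 / 8


# (native, 2:1 compressed) transfer rates in MB/s
DRIVE_MB_S = {"LTO-3": (80.0, 160.0), "LTO-4": (120.0, 240.0)}

for link in (1, 10):
    rate = link_mb_s(link)
    for drive, (_native, compressed) in DRIVE_MB_S.items():
        verdict = "can" if rate >= compressed else "cannot"
        print(f"{link:>2} Gb/s link ({rate:.0f} MB/s) {verdict} feed {drive} at {compressed:.0f} MB/s")
```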

If you are buying the latest generation of tape drives but are still on a decade-old LAN, what is the point of buying new drives or media?

For example, a 1.8 TB SAP database backup takes 8 hours to write to disk over a 1 Gbps network, but just 2 hours 5 minutes to transfer to tape. Sending the same data directly to tape takes over 16 hours. We effectively saved 6 hours of drive duty cycle and server CPU time.

Tape is going to be around for a while, unless disk-based backup solutions become the norm.
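The SAP example can be checked with a little arithmetic. The sketch assumes decimal units (1.8 TB = 1,800,000 MB) and uses the 16-hour figure as a lower bound for direct-to-tape time.

```python
TOTAL_MB = 1.8e6  # 1.8 TB in decimal megabytes


def mb_per_s(total_mb: float, seconds: float) -> float:
    return total_mb / seconds


disk_s = 8 * 3600           # client -> disk staging over 1 Gbps
tape_s = 2 * 3600 + 5 * 60  # disk staging -> tape (2 h 5 min)
direct_s = 16 * 3600        # client -> tape directly ("over 16 hours")

print(f"to disk:        {mb_per_s(TOTAL_MB, disk_s):.2f} MB/s")
print(f"disk to tape:   {mb_per_s(TOTAL_MB, tape_s):.2f} MB/s")
print(f"direct to tape: {mb_per_s(TOTAL_MB, direct_s):.2f} MB/s")
saved_h = (direct_s - (disk_s + tape_s)) / 3600
print(f"end-to-end time saved: at least {saved_h:.2f} h")
```

The de-staging step runs at 240 MB/s, the LTO-4 compressed ceiling, while the drive's own busy time drops from over 16 hours to about 2 hours.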

Disclaimer: the HBA insights are by Ken Thompson of Symantec.

Author: Raghunath L

DataProtection team

Texas Instruments, Chase Oaks Blvd,

Plano, TX
