Are machines better than humans? An (almost-real) case study.

Given the explosive coverage of machine learning and artificial intelligence (AI), it is worth pointing out that the eDiscovery software industry in general, and Veritas eDiscovery Platform in particular, are among the first success stories of supervised machine learning. Our machine learning features have been on the market since 2012 and have been used successfully in regulatory investigations and some high-profile litigation*. In our assessment, machine learning in compliance and legal eDiscovery contexts is already mainstream, and adoption should accelerate as awareness grows. In this article, we summarize our first-hand experience playing investigators, using our machine learning features on the infamous Enron dataset. If you are an “old hand” at machine learning, treat this as a refresher. If you are considering machine learning for compliance and investigation scenarios, this article should help you appreciate the benefits.

A few stats on the cost and ability of humans in compliance and legal review scenarios will help set context. Human reviewers cost anywhere from $25 to $300 per hour: contract and junior lawyers at the low end, senior lawyers and subject matter experts at the higher end of the range. The fastest legal reviewers can review and assess relevance at a rate of 100 documents per hour. Putting this together, we estimate that the cost of human compliance/legal review is about $1-$1.75 per document for small documents and $4-$7 per document for large and complex documents. Comprehension, and its effect on accuracy, is another matter altogether. Academics estimate that compliance/legal reviewers achieve about 36% accuracy versus 80% for machine-assisted review.
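The per-document arithmetic can be sketched as follows. This is a minimal illustration, not the model behind the estimates above: the function names are hypothetical, and the article does not state which throughput or rate mix produced its $1-$1.75 figure. Dividing the quoted hourly rates by the fastest quoted throughput gives only a floor on cost; slower, more typical review speeds push the per-document figure up. The 323,000 item count is the culled Enron review set described later in this article.

```python
def cost_per_document(hourly_rate_usd, docs_per_hour):
    """Review cost per document = hourly rate / documents reviewed per hour."""
    if docs_per_hour <= 0:
        raise ValueError("docs_per_hour must be positive")
    return hourly_rate_usd / docs_per_hour


def review_cost_range(num_docs, low_per_doc_usd, high_per_doc_usd):
    """Total review cost range for a document set at a per-document cost range."""
    return num_docs * low_per_doc_usd, num_docs * high_per_doc_usd


# Figures quoted in the article.
FASTEST_DOCS_PER_HOUR = 100
floor_junior = cost_per_document(25, FASTEST_DOCS_PER_HOUR)
floor_senior = cost_per_document(300, FASTEST_DOCS_PER_HOUR)

# Hypothetical reviewer (rate and speed are assumptions, not from the article).
hypothetical = cost_per_document(50, 40)

# Article's small-document estimate applied to the culled Enron review set.
low_total, high_total = review_cost_range(323_000, 1.00, 1.75)

print(f"Floor at fastest speed: ${floor_junior:.2f} to ${floor_senior:.2f} per document")
print(f"Hypothetical $50/hour reviewer at 40 docs/hour: ${hypothetical:.2f} per document")
print(f"323,000 small documents: ${low_total:,.0f} to ${high_total:,.0f}")
```

Even at the low end of the article's estimate, a fully manual pass over a review set of this size runs into hundreds of thousands of dollars, which is the motivation for prioritizing review with machine-generated predictions.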

For our case study, we played the role of regulators investigating the much-maligned Enron Corporation, which died of unnatural causes after filing for bankruptcy in December 2001. Among the long list of Enron wrongdoings, the company was investigated by the Federal Energy Regulatory Commission (FERC) for its role in the wholesale electricity market in California. Briefly, the State of California deregulated its electricity market in the late 1990s. This allowed companies like Enron to trade wholesale electricity just like natural gas, heating oil and other commodities. In 2000-2001, California experienced widespread electricity shortages (California natives might recall blackouts and brownouts) and rapid price increases for consumers and businesses. Utilities came under severe financial strain, and PG&E filed for bankruptcy. Enron was suspected of manipulating wholesale electricity prices through a variety of trading practices, such as withholding electricity and selling it when prices were high. This resulted in the FERC investigating Enron’s trading actions in the wholesale electricity market.

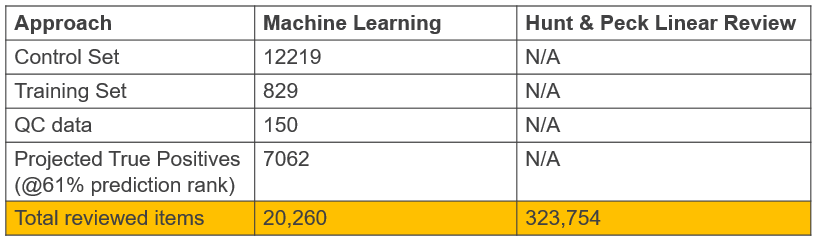

The publicly available Enron dataset contains over 459,000 items: emails, emails with attachments, and unattached spreadsheets, Word and PowerPoint documents. After culling based on date ranges, sender domains/addresses, file types and document sizes, our simulated regulator would have been able to reduce the data to about 323,000 reviewable items. At this point, the “hunt & peck” approach would involve the regulator searching for evidence using criteria such as:

- ALL THESE WORDS: California;

- ANY OF THESE WORDS: wholesale, electricity, power, trading;

- NOT THESE WORDS: “natural gas”.
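To make the “hunt & peck” criteria concrete, here is a minimal sketch of how such a Boolean filter behaves. It is an illustration only and does not represent the search syntax or engine of Veritas eDiscovery Platform. The function name `matches_criteria` and the sample strings are invented for this example and are not taken from the Enron dataset.

```python
import re


def matches_criteria(text, all_words, any_words, not_phrases):
    """Return True if text has every word in all_words, at least one word
    in any_words, and none of the phrases in not_phrases (case-insensitive)."""
    lowered = " ".join(text.lower().split())  # normalize case and whitespace
    tokens = set(re.findall(r"[a-z0-9]+", lowered))
    has_all = all(word.lower() in tokens for word in all_words)
    has_any = any(word.lower() in tokens for word in any_words)
    has_excluded = any(phrase.lower() in lowered for phrase in not_phrases)
    return has_all and has_any and not has_excluded


# The criteria listed above.
ALL_WORDS = ["California"]
ANY_WORDS = ["wholesale", "electricity", "power", "trading"]
NOT_PHRASES = ["natural gas"]

# Invented sample texts (not from the Enron dataset).
samples = [
    "California wholesale power prices spiked again this week",
    "California natural gas storage and trading update",
    "Houston electricity trading desk staffing",
]

for sample in samples:
    hit = matches_criteria(sample, ALL_WORDS, ANY_WORDS, NOT_PHRASES)
    print(f"{hit!s:5}  {sample}")
```

The second sample shows the weakness of this approach: any item that mentions natural gas is excluded, even if it also discusses California electricity trading. Keyword criteria have to be hand-tuned to avoid such misses, which is the gap that prediction-based review aims to close.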

The machine learning approach, based on Veritas eDiscovery Platform’s patented Transparent Predictive Coding, would involve training the software on a few hundred items with a good balance of relevant and non-relevant evidence. Once trained, the software can predict relevance for un-reviewed items. This is a gross simplification of the workflow used in practice, but it lets us focus on the results we observed.
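The train-then-predict idea can be sketched with a generic supervised text classifier. The example below is a toy multinomial Naive Bayes model written in plain Python. It is not Transparent Predictive Coding, whose algorithm is not described in this article, and the class name `TinyNaiveBayes`, the training strings and the labels are all invented for illustration. A real training set would contain a few hundred reviewed items rather than six.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class TinyNaiveBayes:
    """Toy multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        return self

    def _log_joint(self, tokens, label):
        counts = self.word_counts[label]
        total = sum(counts.values()) + len(self.vocab)
        log_prior = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        # Words never seen in training are ignored.
        return log_prior + sum(
            math.log((counts[t] + 1) / total) for t in tokens if t in self.vocab
        )

    def score(self, text):
        """Estimated probability (0 to 1) that the text is relevant."""
        tokens = tokenize(text)
        log_odds = self._log_joint(tokens, True) - self._log_joint(tokens, False)
        return 1.0 / (1.0 + math.exp(-log_odds))


# Invented, balanced training set: True = relevant, False = not relevant.
training = [
    ("California wholesale electricity prices and trading strategy", True),
    ("FERC inquiry into California power market", True),
    ("Governor Davis statement on California blackouts", True),
    ("Lunch meeting schedule for Friday", False),
    ("Natural gas pipeline maintenance notice", False),
    ("Fantasy football league standings", False),
]
model = TinyNaiveBayes().fit([t for t, _ in training], [y for _, y in training])

# Score un-reviewed items and list the most likely relevant ones first.
unreviewed = [
    "Friday lunch schedule update",
    "FERC asks about California electricity trading",
]
ranked = sorted(unreviewed, key=model.score, reverse=True)
for item in ranked:
    print(f"{model.score(item):.2f}  {item}")
```

Sorting by score is what lets reviewers start with the items most likely to matter instead of reading the collection in arbitrary order. Unlike the keyword criteria, nothing here excludes an item outright; an unexpected word only lowers its rank.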

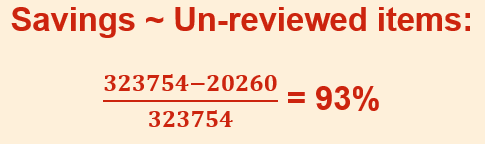

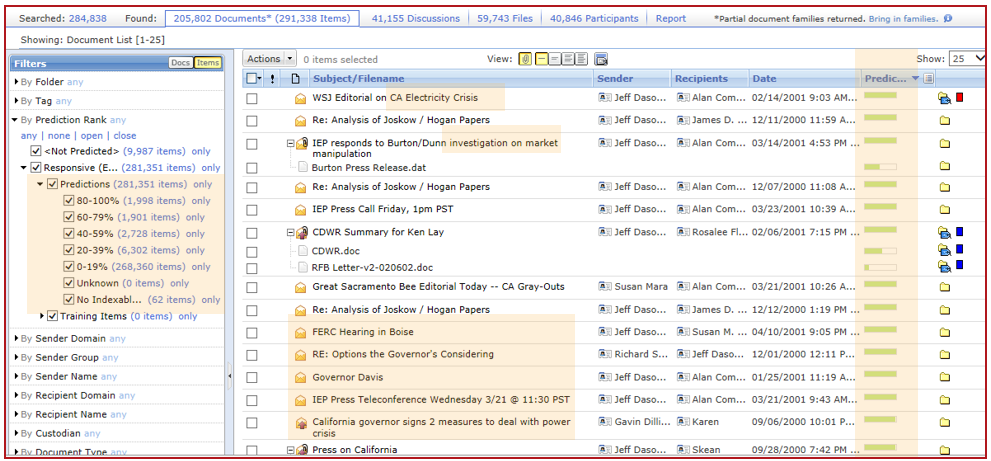

The screenshot shows documents and their machine-generated prediction scores; higher scores indicate greater relevance. At a glance, machine learning has found content related to the California crisis emailed around by Enron employees, emails related to Governor Davis’ actions and to the FERC investigation itself, emails to and from Jeff Dasovich (VP of Regulatory Affairs at Enron), and so on. An email may be scored higher than its attachments (and vice versa), which lets reviewers get to important evidence quickly. This is a key feature of our software.

If this has piqued your interest in our machine learning features, give us a shout. We would be happy to talk to you about your eDiscovery needs.

Footnotes:

* The product brand is now Veritas eDiscovery Platform.
