NetBackup Cloud Auto-Scaling

For a customer who deploys and hosts her own data protection software, the last two things she wants to do are (a) remember to account for configuration and customization of data protection whenever decisions are made about new IT applications and (b) ensure that the data protection software is configured to handle growth, be it seasonal or organic. In traditional environments these could be the norm, but in cloud environments, public or private, they are deviations. Data protection software is also expected to meet the basic criteria that prompted the IT applications and data to move to the cloud in the first place: freedom from infrastructure management, on-demand scale, and tighter control over IT operational expenses. After all, data protection software is just another IT application, albeit a critical one in the infrastructure category.

What keeps backup administrators tied to hands-on management of data protection software? The first reason is obvious: it is the same as on-premises. Every time a new application or virtual machine is added to the portfolio, the corresponding data protection planning (storage classification, backup and recovery SLAs, and so on) must be incorporated. Labels and tags have come in handy for automating protection, but they have given rise to the second reason.

The second reason is the infrastructure used by the data protection software. Infrastructure here means the compute resources that perform backup and restore, the network used to reach the workload, and the roles and permissions needed to access it. Infrastructure awareness is critical in the cloud (or in any chargeback model), and it is distinctly different from a traditional data center setting. That is because you want to pay only for what you use, and if you pre-provision, you pay for what you don’t use. Tag- and label-based intelligent selections aggravate the problem by making the scope of protection dynamic and unpredictable.

Let’s take an example. When Gary sets up the data protection environment for his organization in its current Azure subscription, his protection plan is based on two fairly static applications that exist in the subscription today, spanning 15 VMs. Application owners have given Gary a heads-up that the application footprints may grow soon, because the applications are being onboarded from on-prem as load-aware, dynamically scalable applications that span a few VMs in steady state but more under stress. So there is planned growth and the possibility of an unplanned spike. Gary negotiates a set of tags with the application administrators to take advantage of intelligent asset groups.

Armed with the information, Gary sets up Asset Groups for each application, which he subscribes to the protection plans. So, protection is taken care of, or so he thinks!

In reality, as days go by, more subscriptions will be added, more applications will be onboarded, and existing applications will scale out. However, backup windows are expected to remain constant; in 24x7 follow-the-sun IT operations environments, there are other important things to be done. And costs are expected to remain predictable.

So how does Gary ensure that he doesn’t have to pre-provision compute resources for data protection to accommodate the maximum that his applications may demand? How does he ensure that the backup and restore SLAs are met irrespective of how many VMs show up simultaneously on any given day? And most importantly, how does he stay within the maximum allowed operations cost for backup and restore activities, balancing operational targets against cost, without blowing his yearly budget in July?

In NetBackup 9.1, Gary will get all the above. Gary will not only be able to deploy the cloud-integrated components for true scale-out of backup and recovery but will also control the maximum usage of his cloud infrastructure.

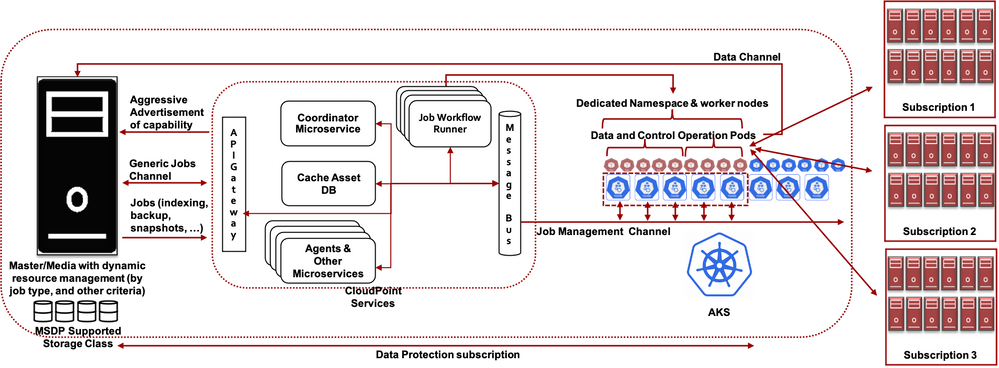

The crucial component of this architecture is the Kubernetes cluster that NetBackup can attach to. Once attached, the backup and recovery services can scale out on demand into a dedicated namespace, up to the maximum allocation of worker hosts for that namespace. The cloud controller of NetBackup, CloudPoint, evaluates the job capacity of the worker hosts and advertises the maximum capacity, and the custom auto-scaler ensures that only the required number of worker hosts are provisioned as needed and shut down when no longer needed.

Let’s make our example concrete. Say Gary needs a plan to complete 500 cloud snapshot and backup jobs in a three-hour backup window, with a maximum concurrent job limit of 100. However, the current protection set is 15 VMs. Instead of provisioning a single worker host with the capacity to run 100 jobs, Gary can pick worker hosts that each have the capacity to run 25 snapshot backup jobs and designate a Kubernetes cluster namespace for a maximum of four such worker hosts.
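The sizing arithmetic behind Gary’s plan can be sketched in a few lines of Python. This is only an illustration: the function name `plan_capacity` is hypothetical, and the model assumes jobs run in back-to-back batches ("waves") of equal length, which is a simplification and not a description of how NetBackup actually schedules jobs.

```python
import math


def plan_capacity(total_jobs, window_hours, jobs_per_host, max_hosts):
    """Hypothetical sizing helper (not a NetBackup API).

    Returns a tuple of:
      - peak concurrency the namespace can offer,
      - number of full-concurrency waves needed to finish all jobs,
      - longest average job duration (minutes) that still fits the window.
    Assumes equal-length waves, which is a simplification.
    """
    peak_concurrency = jobs_per_host * max_hosts
    waves = math.ceil(total_jobs / peak_concurrency)
    max_minutes_per_job = window_hours * 60 / waves
    return peak_concurrency, waves, max_minutes_per_job


# Gary's numbers: 500 jobs, 3-hour window, 25 jobs per host, at most 4 hosts.
peak, waves, minutes = plan_capacity(500, 3, 25, 4)
print(f"peak concurrency: {peak}, waves: {waves}, "
      f"max average job time: {minutes:.0f} min")
```

Under these assumptions, four hosts of 25 jobs each give the required concurrency of 100, and the 500 jobs fit in the window as long as an average job finishes within 36 minutes.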

This takes care of maximum concurrency: the cloud controller will detect and advertise a capacity of 100 concurrent jobs, and the NetBackup control plane will schedule backup jobs with this number in mind. The custom auto-scaler will add or remove worker hosts based on actual demand, and it can never exceed 4 worker hosts because of the restrictions set by Gary (or the k8s cluster administrator). No idle worker host will be kept running except for one, which takes care of the occasional instant backups and restores.
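The scaling rule described above can be approximated as a simple clamp. The sketch below is not the NetBackup auto-scaler; `desired_worker_hosts` and its parameters are hypothetical names chosen for illustration, and the only inputs are the figures from Gary’s example (25 jobs per host, at most 4 hosts, 1 host always kept running).

```python
import math


def desired_worker_hosts(active_jobs, jobs_per_host=25, max_hosts=4, min_hosts=1):
    """Hypothetical scaling rule: enough hosts for current demand,
    never fewer than min_hosts and never more than max_hosts."""
    needed = math.ceil(active_jobs / jobs_per_host)
    return max(min_hosts, min(needed, max_hosts))


# Demand below, at, and above the advertised capacity of 100 jobs.
for jobs in (0, 15, 26, 100, 140):
    print(jobs, "jobs ->", desired_worker_hosts(jobs), "worker host(s)")
```

With no jobs, or with today’s 15 VMs, one host is enough. Demand above 100 concurrent jobs is still capped at four hosts, so any excess jobs would have to wait rather than trigger more spending.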

So Gary now has the flexibility to strike the right balance between his cost targets and operational targets. As needed, he may increase or decrease the number of allocated worker hosts; NetBackup will detect the change and adjust job scheduling accordingly. Even for complex multi-account environments where backup and recovery from snapshots are done using direct read and write APIs for snapshots, setting up and maintaining the data protection infrastructure remains predictable and hassle-free.

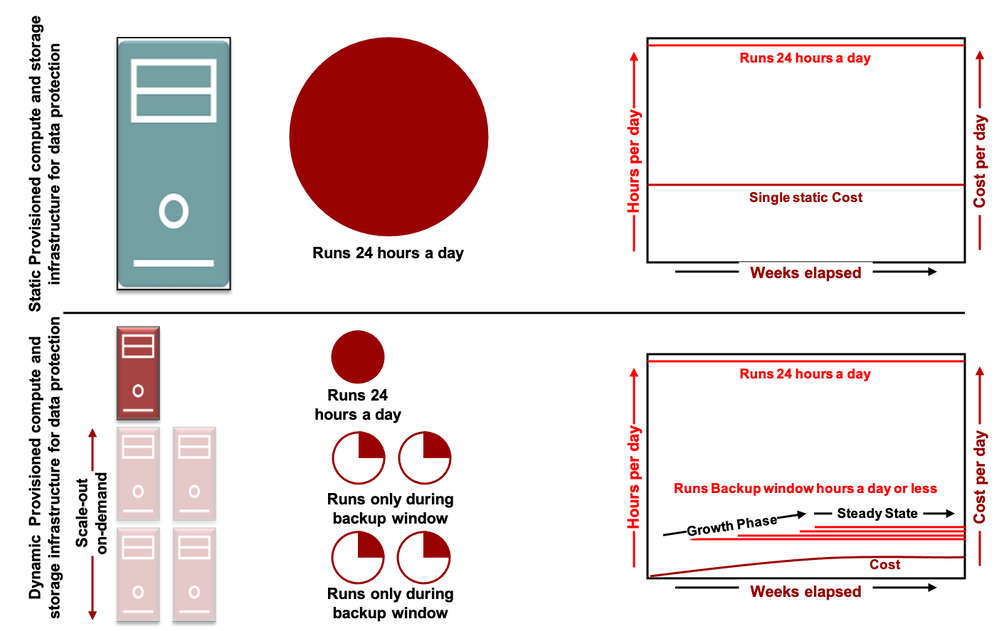

In addition to the tremendous flexibility, let’s also look at the cost savings, keeping all other things constant and assuming a backup window of 3 hours per day for ongoing backups.

Statically provisioning data protection infrastructure (compute and storage) to accommodate a known future requirement of 100 concurrent jobs, when the current requirement is 15 concurrent jobs, is more than 80% costlier than the auto-scaling that NetBackup 9.1 offers. Even after the requirement reaches the steady state of 100 concurrent jobs, auto-scaling in NetBackup 9.1 still yields cost savings of 66%.
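To get a feel for where savings of this kind come from, here is a rough compute-only model in Python. It is not the cost model behind the figures above. It assumes cost is proportional to worker-host hours, that static provisioning keeps four 25-job hosts running 24 hours a day, and that auto-scaling runs only the hosts demand requires during the 3-hour window plus one idle host for the rest of the day. Storage and instance pricing are ignored, and all function names are hypothetical.

```python
def hosts_for(jobs, jobs_per_host=25):
    """Ceiling division: hosts needed to run `jobs` concurrently."""
    return -(-jobs // jobs_per_host)


def static_host_hours(peak_jobs, hours_per_day=24):
    """Static provisioning: peak capacity running all day (assumption)."""
    return hosts_for(peak_jobs) * hours_per_day


def autoscaled_host_hours(concurrent_jobs, window_hours=3, max_hosts=4,
                          idle_hosts=1, hours_per_day=24):
    """Auto-scaling: demand-sized capacity during the backup window,
    a single idle host outside it (assumption)."""
    busy = max(idle_hosts, min(hosts_for(concurrent_jobs), max_hosts))
    return busy * window_hours + idle_hosts * (hours_per_day - window_hours)


def savings(static_hours, scaled_hours):
    """Fraction of host-hours saved relative to static provisioning."""
    return 1 - scaled_hours / static_hours


static = static_host_hours(100)
for jobs in (15, 100):
    scaled = autoscaled_host_hours(jobs)
    print(f"{jobs} concurrent jobs: {scaled} vs {static} host-hours/day, "
          f"savings {savings(static, scaled):.0%}")
```

Under these simplified assumptions, the steady-state case of 100 concurrent jobs saves roughly 66% of host-hours, in line with the figure quoted above. The 15-job case comes out at 75%, so this sketch should not be read as reproducing the comparison for current requirements.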

Data protection in the cloud does not have to be a compromise between cost and features. With NetBackup 9.1, the savings come from two aspects: first, running the required capacity instead of the maximum capacity; second, running it for the required time instead of all the time. So back up your cloud workloads to deduplicated storage without pre-provisioning resources, and pay only for what is used. Refer to the NetBackup documentation for more details.
