Veritas Information Studio: a technical deep dive

With Information Studio, Veritas provides IT professionals a tool to easily identify specific details about data and the information it contains, pinpointing areas of risk, waste, and potential value.

Information Studio offers clear visibility, targeted analysis, and informed action on data, so organizations can confidently address security concerns, upcoming regulations, and continued data growth. The result is better end-to-end efficiency in data management and renewed control over data.

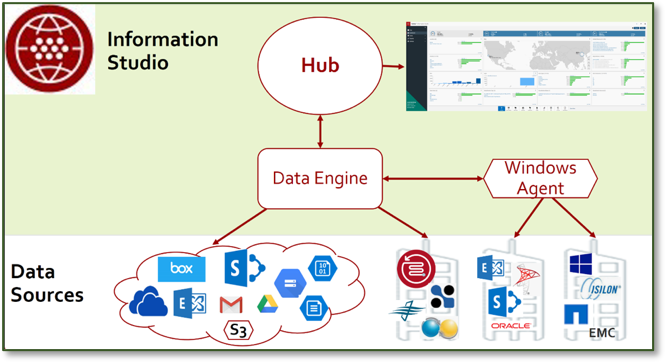

The Information Studio hub and data engine

Information Studio contains two major components: the hub and the data engine. The hub is responsible for management, maintaining configuration details, managing jobs, storing data, logging, and reporting. The data engine is responsible for connecting with data sources, topology discovery, metadata collection, classification, and sending data to the hub.

The data engine includes connectors for many data sources. However, on-premises versions of Microsoft SharePoint, Exchange, SQL Server, Oracle, and CIFS shares require an agent to be installed on a separate Windows VM that resides within the same local network as the data source. Nothing needs to be installed on the data sources themselves.

Let’s get technical: how does it work?

Data sources are connected

To begin, an authorized user provides credentials and connection details for the data sources; this information is stored securely in the Hub. Information Studio includes connectors to a number of data sources. Some of these connectors run natively in the data engine, while others are installed as an agent that runs in a Windows VM.

Native Data Engine Connectors:

Cloud Data Sources:

- Box for Enterprise

- Google Gmail, Drive and Cloud Platform

- Microsoft Azure

- Office 365 (Microsoft Exchange Online, SharePoint Online and OneDrive)

- S3-compatible storage

On-Premises Data Sources:

- Veritas NetBackup

- OpenText LiveLink

- OpenText Documentum

- IBM FileNet

Windows Agent Connectors:

- Microsoft Exchange

- Microsoft SQL Server

- Microsoft SharePoint

- Oracle Database

- CIFS servers, including:

  - Windows file server

  - NetApp (7-Mode and clustered ONTAP)

  - EMC Celerra/VNX

  - Isilon

Topology is discovered

A discovery policy containing a schedule is assigned to a data source. The scheduler on the Hub sends the appropriate data engine a request to discover the topology on the data source. The data engine then connects with the data source, discovers the topology, and sends that information back to the Hub which stores it within its database.

Data is scanned and metadata is collected

A scan policy containing a schedule is created for a data source. The scheduler on the Hub sends the appropriate data engine a request to start a scan on the data source. The data engine connected to the data source captures metadata, such as create, access, and modification times, as well as the file path. It also captures user-defined tags from Office 365 data sources. The data engine then performs a differential operation that ignores unchanged data, normalizes the remaining metadata into a standard JSON format, and pushes it to the Hub. The Hub indexes this information and stores it in its database.
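The differential and normalization steps can be sketched as follows. This is a minimal illustration, not the product's implementation: the record fields, the `normalize` and `differential` function names, and the choice to compare whole records are all assumptions made for the example.

```python
import json
from datetime import datetime, timezone


def to_iso(timestamp):
    """Convert a POSIX timestamp to an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat()


def normalize(path, created, accessed, modified, tags=()):
    """Build one metadata record in a single, source-independent shape (hypothetical schema)."""
    return {
        "path": path,
        "created": to_iso(created),
        "accessed": to_iso(accessed),
        "modified": to_iso(modified),
        "tags": sorted(tags),
    }


def differential(previous, current):
    """Keep only records that are new or differ from the previous scan."""
    return [record for path, record in current.items() if previous.get(path) != record]


previous_scan = {
    "/a": normalize("/a", 0, 0, 0),
    "/b": normalize("/b", 0, 0, 0),
}
current_scan = {
    "/a": normalize("/a", 0, 0, 0),   # unchanged, so it is skipped
    "/b": normalize("/b", 0, 0, 60),  # modification time changed
    "/c": normalize("/c", 0, 0, 0),   # new item
}

changed = differential(previous_scan, current_scan)
payload = json.dumps(changed)  # what a data engine might push to the Hub
```

Only the changed and new records end up in `payload`, which keeps the amount of data sent to the Hub proportional to what changed rather than to the size of the data source.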

Running classification

Information Studio can also find other important information, such as which data is subject to regulatory requirements or contains personally identifiable information (PII). To simplify this, Information Studio comes with more than 700 preconfigured data classification patterns and more than 110 policies to identify common data privacy and regulatory compliance principles. Veritas regularly adds to and updates these patterns and policies to support new and changing compliance regulations.

Below are some examples of these preconfigured policies, grouped by regulation type:

- Corporate Compliance: authentication, “company confidential” and IP, ethics and code of conduct, IP addresses, PCI-DSS and proposals/bids.

- Financial Regulations: bank account numbers, credit card numbers, GLBA, SOX, SWIFT Codes and U.S. financial forms.

- Health Regulations: Australia Individual Healthcare Identifier, Canada Healthcare Identifier, ICD-10-CM diagnosis indexes, medical record numbers, U.S. DEA numbers and U.S. HIPAA.

- International Regulations: Australia/Canada/U.K./U.S. driver’s license and passport numbers, Australia tax, U.K. Unique Tax Reference (UTR), U.S. Social Security number and Taxpayer ID, Canada SIN, France National ID, Italy Codice Fiscale and Switzerland National ID.

- Personally Identifiable Information (PII) from over 35 countries.

- Sensitive data policies from over 35 countries.

- U.S. Federal Regulations: criminal history, FCRA, FERPA, FFIEC, FISMA and IRS 1075.

- U.S. State Regulations: California Assembly Bill 1298 (HIPAA), California Financial Information Privacy Act (SB1) and Massachusetts regulation 201 CMR 17.00 (MA 201 CMR 17).

When it is important to identify files that match other specific patterns, custom policies can be created. A custom policy can contain any number of conditions. Conditions can look for multiple words, phrases, regular expressions, keywords and lexicons, and can use wildcards as well as the NEAR and BEFORE operators to express proximity.
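To make the proximity operators concrete, here is a rough sketch of how NEAR and BEFORE conditions might be evaluated over a tokenized document. The exact semantics in Information Studio are not described here, so the definitions below (distance counted in words, NEAR ignoring order, BEFORE requiring order) and the function names are assumptions.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def positions(words, term):
    """Return every index at which a single-word term occurs."""
    term = term.lower()
    return [index for index, word in enumerate(words) if word == term]


def near(text, first, second, distance):
    """True if the two terms occur within `distance` words of each other, in either order."""
    words = tokenize(text)
    return any(
        i != j and abs(i - j) <= distance
        for i in positions(words, first)
        for j in positions(words, second)
    )


def before(text, first, second, distance):
    """True if `first` occurs at most `distance` words ahead of `second`."""
    words = tokenize(text)
    return any(
        0 < j - i <= distance
        for i in positions(words, first)
        for j in positions(words, second)
    )


sample = "The patient account number is 12345 and was verified."
patient_near_number = near(sample, "patient", "number", 3)      # two words apart
number_before_patient = before(sample, "number", "patient", 3)  # wrong order
```

In this sample, "patient" and "number" are two words apart, so the NEAR check succeeds with a distance of 3, while the BEFORE check fails because "number" follows "patient" rather than preceding it.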

Within the Information Studio UI, an authorized user can select which classification policies to enable and on which data sources.

A classification policy containing a schedule is created for a data source. The asset service on the Hub queries the indexed data to build a list of files that have not been classified since they were last modified. It then filters this list by the inclusion and exclusion rules contained in the policy. The filtered list of items is sent from the Hub to the appropriate data engine, along with a request to start classification on those specific items.
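The selection logic in this step can be sketched in a few lines. The `IndexedItem` type, its field names, and the use of glob-style patterns for inclusion and exclusion rules are hypothetical; the rule syntax Information Studio actually uses is not covered here.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class IndexedItem:
    path: str
    modified: float                     # last modification time (POSIX timestamp)
    classified: Optional[float] = None  # last classification time, None if never classified


def needs_classification(item):
    """An item qualifies if it was never classified or changed after its last classification."""
    return item.classified is None or item.classified < item.modified


def select_items(items, include=("*",), exclude=()):
    """Apply the staleness check, then the inclusion and exclusion patterns."""
    selected = []
    for item in items:
        if not needs_classification(item):
            continue
        if not any(fnmatchcase(item.path, pattern) for pattern in include):
            continue
        if any(fnmatchcase(item.path, pattern) for pattern in exclude):
            continue
        selected.append(item)
    return selected


index = [
    IndexedItem("/share/hr/a.docx", modified=100),                  # never classified
    IndexedItem("/share/hr/b.docx", modified=100, classified=200),  # already up to date
    IndexedItem("/share/tmp/c.docx", modified=300, classified=200), # changed, but excluded
    IndexedItem("/share/hr/d.mp4", modified=100),                   # not an included type
]

work_list = select_items(index, include=("*.docx",), exclude=("*/tmp/*",))
```

Here only `/share/hr/a.docx` reaches `work_list`, which is the kind of reduced list the Hub would hand to a data engine.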

The data engine reads the items from the data source and temporarily stores a local copy. The data engine's classifier reads the text from these files and looks for matches to the conditions within the enabled classification policies. Each match adds a classifier tag that Information Studio associates with the file. This tag is sent to the hub, where it is stored in a database and indexed. After classification is complete, the file is deleted from the data engine's local storage.

Note: Classification can be run on data within Microsoft SharePoint on-premises, Office 365 OneDrive, or a CIFS share. These CIFS shares can be hosted anywhere; examples of supported CIFS shares include Windows file servers, NetApp filers, EMC Celerra, VNX and Isilon.
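The copy, classify, tag, and delete sequence might look like the sketch below. The two policies, their regular expressions, and the `classify_item` function are illustrative assumptions, and real text extraction from office documents is considerably more involved than decoding bytes as UTF-8.

```python
import re
import tempfile
from pathlib import Path

# Hypothetical policies: tag name -> pattern that triggers the tag.
POLICIES = {
    "us-ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential": re.compile(r"company confidential", re.IGNORECASE),
}


def classify_item(read_source, policies=POLICIES):
    """Copy an item locally, match it against each policy, and return the resulting tags.

    `read_source` is a callable that returns the item's content as bytes.
    """
    with tempfile.TemporaryDirectory() as workdir:
        local_copy = Path(workdir) / "item"
        local_copy.write_bytes(read_source())
        text = local_copy.read_text(encoding="utf-8", errors="ignore")
        tags = sorted(name for name, pattern in policies.items() if pattern.search(text))
    # Leaving the `with` block removes the temporary directory and the local copy.
    return tags


tags = classify_item(lambda: b"Company Confidential: SSN 123-45-6789")
```

Using a temporary directory as a context manager ties the cleanup to the end of classification, so the local copy is removed even if matching raises an error.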

Generating reports

Information Studio displays the information it captures about the data in your infrastructure in the UI. The information is presented as a series of graphs that group similar data and a map view that shows where the data resides.

These reports can be filtered and then used to take informed action. For example, a report can be filtered to show all files in the EU that contain PII and are more than two years old. With this list of items, an authorized user can defensibly delete the files, reducing risk and increasing storage efficiency within the infrastructure.

The reports also identify orphaned, stale and non-business data, along with the cost associated with each.
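As a rough illustration of such a filter, the sketch below selects PII-tagged items in one region that are older than a given number of years. The `ReportItem` fields, the "PII" tag name, and measuring age from the modification time are assumptions for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ReportItem:
    path: str
    modified: datetime
    region: str
    tags: frozenset


def pii_older_than(items, region, years, now):
    """Return items in `region` tagged as PII and last modified more than `years` ago."""
    cutoff = now - timedelta(days=365 * years)
    return [
        item
        for item in items
        if "PII" in item.tags and item.region == region and item.modified < cutoff
    ]


UTC = timezone.utc
now = datetime(2024, 1, 1, tzinfo=UTC)
items = [
    ReportItem("/eu/hr/old.csv", datetime(2020, 5, 1, tzinfo=UTC), "EU", frozenset({"PII"})),
    ReportItem("/eu/hr/new.csv", datetime(2023, 6, 1, tzinfo=UTC), "EU", frozenset({"PII"})),
    ReportItem("/us/hr/old.csv", datetime(2020, 5, 1, tzinfo=UTC), "US", frozenset({"PII"})),
    ReportItem("/eu/docs/old.txt", datetime(2019, 1, 1, tzinfo=UTC), "EU", frozenset()),
]

candidates = pii_older_than(items, region="EU", years=2, now=now)
```

Passing `now` explicitly keeps the filter deterministic, which is useful when the resulting list is reviewed before anything is deleted.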

- Orphaned data is data that no longer has an associated owner within the company.

- Stale data is data that is older than a configurable amount of time.

- Non-business data is data identified by configurable values, such as video, audio or game files.

Once identified, this data can be managed appropriately, for example by defensibly deleting it or moving it to lower-cost archive storage.
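The three categories can be expressed as simple predicates. The sketch below is only an approximation of the idea: the extension list, the 730-day default, and the `categorize` signature are hypothetical stand-ins for the configurable values mentioned above.

```python
# Hypothetical configuration: extensions treated as non-business data.
NON_BUSINESS_EXTENSIONS = {".mp4", ".mp3", ".avi"}


def categorize(owner, known_owners, age_days, extension, stale_after_days=730):
    """Return the set of report categories that apply to one item."""
    labels = set()
    if owner not in known_owners:
        labels.add("orphaned")      # owner is no longer known to the company
    if age_days > stale_after_days:
        labels.add("stale")         # older than the configured threshold
    if extension.lower() in NON_BUSINESS_EXTENSIONS:
        labels.add("non-business")  # matches a configured non-business type
    return labels


employees = {"bob"}
old_video = categorize("alice", employees, age_days=800, extension=".MP4")
recent_doc = categorize("bob", employees, age_days=10, extension=".docx")
```

An item can fall into more than one category at once, as `old_video` does, so a set of labels is a better fit than a single value.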

Information Studio architecture

Information Studio runs as a virtual appliance on a VMware ESX server. Its software runs in Docker containers managed by Kubernetes and consists of two major components: the hub and the data engine.

The hub is responsible for management via UI, maintaining configuration details, managing jobs, storing data, logging and reporting. Information Studio uses various Infrastructure Foundation Services to store and manage data on the platform. These services provide the ability to visualize the information gathered about the data in your infrastructure.

The data engine is responsible for connecting with data sources, topology discovery, metadata collection, classification, and sending data to the hub. The data engine contains connectors for many data sources. However, on-premises versions of Microsoft SharePoint, Exchange, SQL Server, Oracle, and CIFS shares require an agent to be installed on a separate Windows VM that resides within the same local network as the data source.

Security is built into both the hub and the data engine. Passwords are stored encrypted in the Hub's PostgreSQL database. Communication between the hub and the data engine is encrypted using SSL.

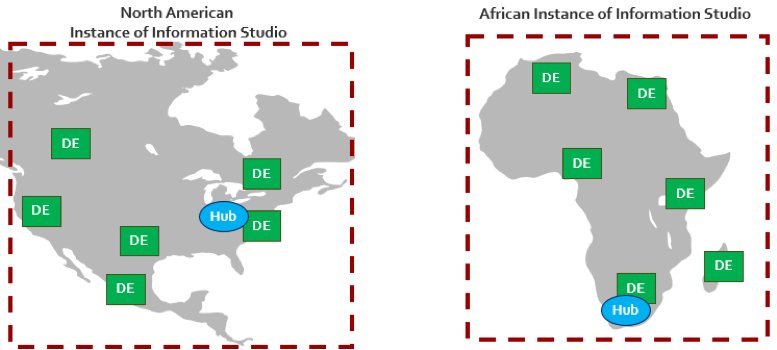

Information Studio is deployed as a single OVA containing both the hub and data engine roles. The minimum configuration is one virtual machine that runs both the Hub and the data engine. In a typical configuration, however, there is one Hub and one or more data engines. Because a large volume of data moves between a data source and its data engine, place each data engine where it has good network bandwidth to its data sources. Having one Hub provides centralized management and control, while having multiple data engines optimizes WAN bandwidth.

Please join me at veritas.com/infostudio for additional details.
