Shared Nothing - A primitive SAN

'Shared Nothing' architecture is not a new concept that is going to phase out SAN-based architecture. It is a precursor to it.

Here is a brief look at the evolution of the two architectures, which should make this point clear.

Storage Area Network

A 'Storage Area Network' is a dedicated network that gives a set of servers or hosts access to block storage devices. Let's see how it has evolved over the years.

Initially, storage devices came bundled with the hosts. This posed a challenge, because the host had to undergo a hardware maintenance shutdown in order to add more storage. Also, the number of storage devices that could be connected to the internal SCSI bus was limited. This brought in the concept of direct attached storage, where the host and the storage were separate physical boxes connected by a cable (initially parallel SCSI and then Fibre Channel). The storage box was just a bunch of disks (JBOD) with a controller that extended the SCSI bus over a SCSI/FC transport.

With the advent of parallel applications, a need emerged for shared storage, and so the storage box started to have multiple SCSI/FC interfaces. This allowed 2-4 hosts to share storage; however, we could not have more than one cluster with over 2 nodes connected to the same storage. This brought in the switched SAN fabric, which allowed a many-to-many mapping between hosts and storage that could be altered dynamically. The concepts of host zoning and LUN masking allowed the SAN administrator to create a virtual sandbox around the host(s) and the storage that belonged to the same application.
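To make the sandbox idea concrete, here is a minimal sketch of how zoning and LUN masking combine. It is a toy model, not any vendor's implementation: the `Fabric` class, the port names, and the LUN numbers are all hypothetical. Zoning is modelled as the switch-side check (which ports may talk to each other) and masking as the array-side check (which hosts a LUN is presented to).

```python
from dataclasses import dataclass, field


@dataclass
class Fabric:
    """Toy model of a switched SAN fabric; every name here is hypothetical."""

    # Zoning: zone name -> set of port names (host HBAs and array ports)
    # that the switch allows to see each other.
    zones: dict = field(default_factory=dict)
    # LUN masking: (array port, LUN id) -> set of host ports the array
    # presents that LUN to.
    masks: dict = field(default_factory=dict)

    def zoned_together(self, host_port, array_port):
        # Two ports can communicate only if some zone contains both.
        return any(
            host_port in members and array_port in members
            for members in self.zones.values()
        )

    def can_access(self, host_port, array_port, lun):
        # Access needs both checks to pass: zoning first, then masking.
        if not self.zoned_together(host_port, array_port):
            return False
        return host_port in self.masks.get((array_port, lun), set())


fabric = Fabric()
# Two applications, each with its own zone and its own LUN.
fabric.zones["app_a"] = {"hostA1", "hostA2", "array1_p0"}
fabric.zones["app_b"] = {"hostB1", "array1_p1"}
fabric.masks[("array1_p0", 0)] = {"hostA1", "hostA2"}
fabric.masks[("array1_p1", 1)] = {"hostB1"}
```

In this sketch `fabric.can_access("hostA1", "array1_p0", 0)` succeeds, while `hostB1` is refused the same LUN because it is not zoned with that array port.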

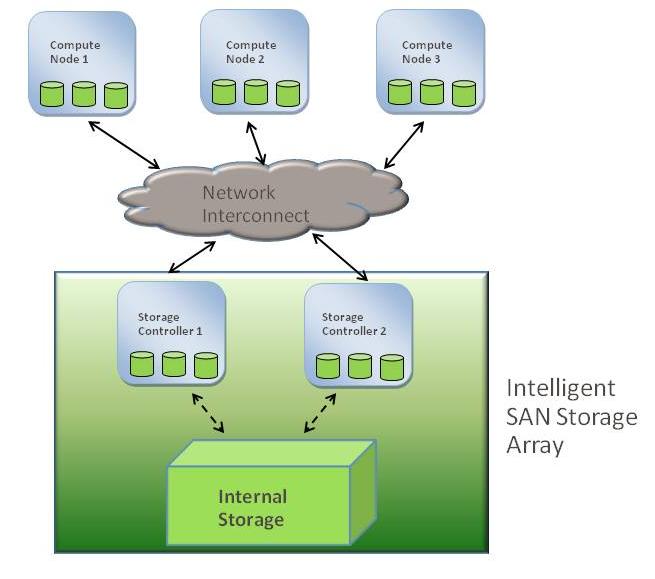

As storage arrays started to cater to a large number of hosts, the storage controllers became as intelligent and as powerful as the hosts. They virtualized a set of storage devices or storage arrays to provide advanced storage management capabilities such as mirrors for resiliency, striping and caching for performance, tiering for intelligent use of classes/types of storage, snapshots, replication, etc. The host only saw a virtualized storage device known as a Logical Unit or LUN.

For load balancing and resiliency, storage arrays began to have multiple storage controllers that form a cluster and manage the same set of storage. To scale horizontally, expensive high-end storage arrays employed distributed caching to provide concurrent access to a LUN from any of the storage controllers. Less expensive arrays instead employed the concept of LUN ownership: a given storage controller maintained preferential access to a LUN, while the other storage controller delegated requests issued for that LUN to the preferred controller over a high-speed internal interconnect.
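The LUN ownership model can be sketched in a few lines. This is only an illustration under simplifying assumptions: two controllers, a direct method call standing in for the internal interconnect, and hypothetical names (`Controller`, `pair`, `forwarded`) that do not come from any real array firmware.

```python
class Controller:
    """Toy storage controller that owns some LUNs and proxies the rest."""

    def __init__(self, name):
        self.name = name
        self.peer = None        # the other controller in the array
        self.owned = set()      # LUN ids this controller has preferred access to
        self.forwarded = 0      # requests delegated to the peer

    def read(self, lun, block):
        if lun in self.owned:
            # Preferred path: the owning controller services the request.
            return f"{self.name} served LUN {lun} block {block}"
        if self.peer is not None and lun in self.peer.owned:
            # Non-preferred path: delegate to the owner over the interconnect
            # (modelled here as a plain method call).
            self.forwarded += 1
            return self.peer.read(lun, block)
        raise LookupError(f"LUN {lun} is not owned by any controller")


def pair(a, b):
    """Connect two controllers so each can delegate to the other."""
    a.peer, b.peer = b, a


ctrl_a, ctrl_b = Controller("ctrl_a"), Controller("ctrl_b")
pair(ctrl_a, ctrl_b)
ctrl_a.owned.add(0)
ctrl_b.owned.add(1)

# A request for LUN 0 that arrives at ctrl_b is serviced by ctrl_a.
reply = ctrl_b.read(0, 7)
```

The extra hop is why hosts generally prefer to send I/O for a LUN to its owning controller when they can tell which one that is.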

Shared Nothing Cluster

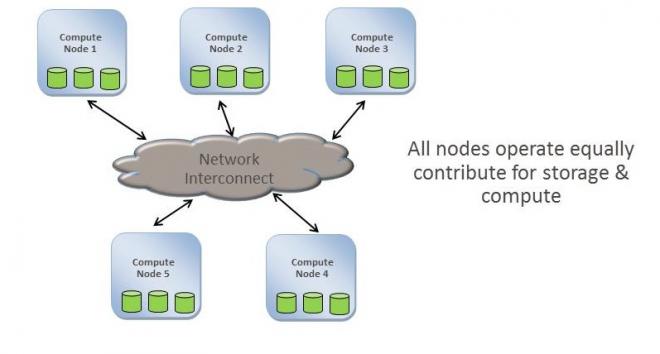

A 'Shared Nothing' cluster is a set of nodes that don't share computing and storage resources, yet behave as a single entity to the external world, providing the same service. This architecture has gained popularity in recent times because it allows horizontal scaling simply by adding new nodes to the cluster, without requiring downtime or reconfiguration.

An interconnect between the nodes is used not only to share cluster configuration data but also for I/O traffic. Since the interconnect of choice for clusters was originally Ethernet or InfiniBand, the same channel is used to send I/O traffic as well. A switched Ethernet or InfiniBand fabric is used to allow direct communication between nodes.

In order to maintain resiliency across failures, each node replicates its local storage across two or more nodes, and the copies of data are re-balanced dynamically as nodes leave or join the cluster. All copies can be remote in cases where the node's local storage is exhausted.

Therefore, servicing an I/O request uses the CPUs of both the node where the application software is running and the nodes where the data is located. Such I/O-intensive and network-intensive work on the remote CPUs can cause non-deterministic response times for application I/O on the remote nodes.
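One way to picture replica placement and re-balancing is the sketch below. It uses rendezvous (highest-random-weight) hashing, which is my choice for illustration and not something the clusters discussed here are known to use; the node and block names are hypothetical. The useful property is that when a node leaves, only the blocks that had a copy on that node need to move.

```python
import hashlib


def _score(node, block):
    # Deterministic pseudo-random weight for a (node, block) pair.
    digest = hashlib.sha256(f"{node}:{block}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(block, nodes, copies=2):
    """Return the `copies` distinct nodes that should hold this block."""
    if copies > len(nodes):
        raise ValueError("not enough nodes for the requested number of copies")
    ranked = sorted(nodes, key=lambda n: _score(n, block), reverse=True)
    return ranked[:copies]


def moved_blocks(blocks, before, after, copies=2):
    """Blocks whose replica set changes when membership goes before -> after."""
    return [
        b for b in blocks
        if set(place(b, before, copies)) != set(place(b, after, copies))
    ]


nodes = ["node1", "node2", "node3", "node4"]
blocks = [f"block{i}" for i in range(100)]

# node3 leaves the cluster: only blocks that had a copy on node3 are re-placed.
survivors = [n for n in nodes if n != "node3"]
to_rebalance = moved_blocks(blocks, nodes, survivors)
```

A real cluster would also have to copy the data and account for capacity, which this sketch ignores.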

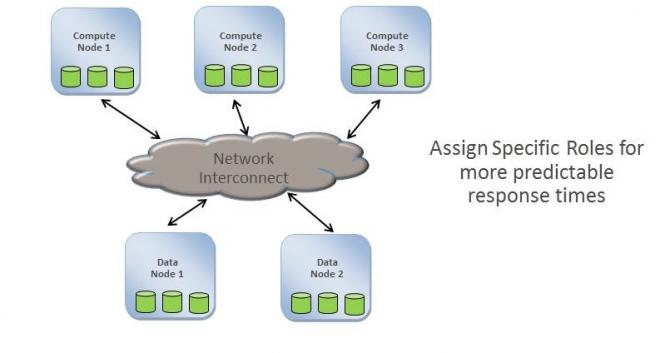

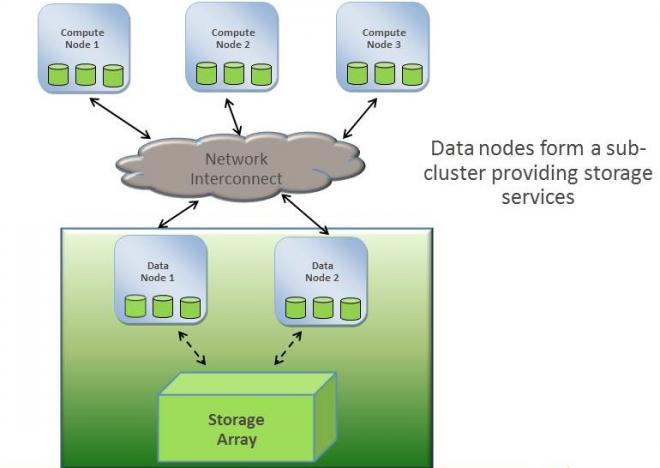

To address such problems, the shared nothing cluster is altered so that a few nodes in the cluster focus on computation while the others focus on data access and storage management functions.

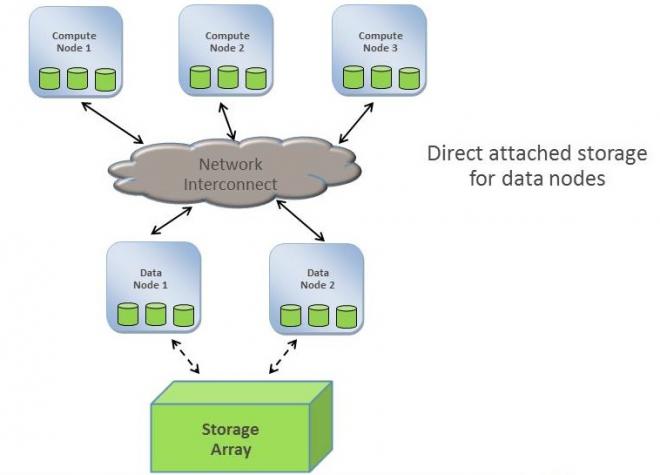

Since internal storage alone cannot meet the growing capacity needs of applications, the data access nodes would need to opt for direct attached storage, or probably a local SAN, so as to minimize downtime when upgrading the storage infrastructure.

Soon there would be a need for a specialized management model for the data nodes, different from that of the compute nodes, as the data nodes start to offer a variety of services and to serve multiple clusters in order to get a better return on investment.

The Comparison

If you look at where the two sections above concluded, you can easily see that the two architectures are pretty similar. A host or computation node CPU interacts with one of the storage node CPUs to gain access to storage that is resilient, gives good performance, and allows shared access from multiple computation nodes.

However, shared nothing clusters are not yet as mature as the SAN. Therefore, the concepts that came up over the years for the SAN, such as masking and zoning, would soon start to emerge in shared nothing environments, as folks would like to deploy multiple clusters on the same shared interconnect but isolate their traffic, both for security and to avoid interference.

Also, when the shared nothing interconnect starts to see a large amount of traffic as the number of computation nodes increases, it would face the same scalability challenges as the SAN. This is when solutions such as Symantec Dynamic Multi-Pathing, which is fine-tuned to perform optimally in the SAN environment, come in handy even for shared nothing clusters.

Multi-pathing is a concept of providing highly available, load balanced access to resources, and it need not be tied only to the SAN domain. It can be employed in shared nothing clusters as well, where there are multiple access paths to the same data node on any interconnect: FC, iSCSI, InfiniBand, etc.

An easy, out-of-the-box implementation of a shared nothing cluster is to employ the target mode of a standard QLogic/Emulex Fibre Channel HBA, or the iSCSI target driver available in various operating systems, on the data nodes to expose local storage to remote compute nodes. The multi-pathing solution on the compute node will then take care of providing resilient access to the storage exposed by the data node.
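The two halves of that definition, load balancing and high availability, can be sketched as round-robin path selection with failover. This is a simplified illustration and not how Symantec Dynamic Multi-Pathing or any other product is implemented; `MultipathDevice`, the path names, and the `send` callback are hypothetical.

```python
class MultipathDevice:
    """Toy multi-path device: round-robin over healthy paths, with failover."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.failed = set()
        self._next = 0

    def pick_path(self):
        # Walk the ring once, skipping paths already marked as failed.
        for _ in range(len(self.paths)):
            path = self.paths[self._next]
            self._next = (self._next + 1) % len(self.paths)
            if path not in self.failed:
                return path
        raise OSError("all paths to the data node have failed")

    def submit(self, send, request):
        # Try each path at most once; mark a path failed on a transport error
        # and retry the same request on the next healthy path.
        for _ in range(len(self.paths)):
            path = self.pick_path()
            try:
                return send(path, request)
            except ConnectionError:
                self.failed.add(path)
        raise OSError("all paths to the data node have failed")


def send(path, request):
    # Stand-in transport: pretend the second FC path has a pulled cable.
    if path == "fc1":
        raise ConnectionError(path)
    return (path, request)


dev = MultipathDevice(["fc0", "fc1", "ib0"])
used = [dev.submit(send, f"r{i}")[0] for i in range(4)]
```

Here the four requests go out over `fc0`, `ib0`, `fc0`, `ib0`: the second request hits the broken `fc1` path, is retried on `ib0`, and `fc1` is skipped from then on. A production implementation would also probe failed paths and restore them once they recover.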

Conclusion

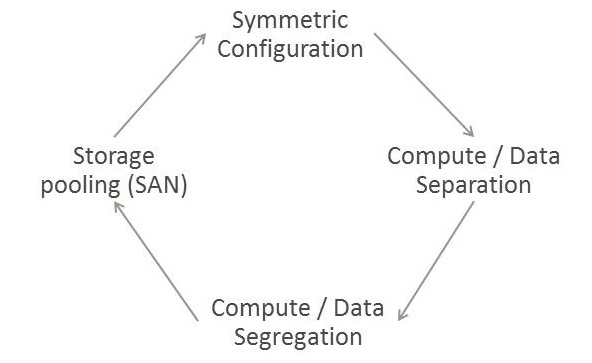

In conclusion, we can see that 'Shared Nothing' as a concept is not new and that the SAN-based architecture has evolved from it. Looking at the various stages in the evolution of the SAN, we can see a cyclic pattern: right now we have just completed one full circle and are on the verge of the next.

I would like to hear back on this view of mine, so please feel free to post your thoughts and feedback...
