- Slow NBD performance when backing up VMs

06-15-2023 10:52 PM

Hello,

We are using VMware vSphere 7 running on a Cisco UCS blade system. VMDK data storage is NFS on a NetApp AFF A400 storage system. The NetBackup 10.1 media server runs on RHEL 8 in a VM and has BasicDisk NFS-mounted volumes from a NetApp FAS 2720 storage system. From there, backup images are duplicated off-site to an HP media server with a StorageTek LTO5 tape library. All network uplinks are at least 2 x 10 Gb/s with MTU 9000.

We used to run classic NetBackup with an agent in the VMware guest OS and have now switched to VMware direct backup using NBD over LAN. We don't have a SAN. The NetBackup VMware policies are configured with the Java admin console.

Backup speeds from VMware to disk are only around 10'000-20'000 KB/s. The NetApp storage systems and the network are not the bottleneck, as direct copy jobs are much faster.
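To put those numbers next to the 10 Gb/s uplinks, here is a rough conversion sketch. It assumes the reported KB/s means 1024 bytes per KB, which is my assumption and not something stated above; the function name is made up for illustration.

```python
# Rough unit conversion: reported backup rate (KB/s) versus a 10 Gb/s uplink.
# Assumption: 1 KB = 1024 bytes. The helper name is hypothetical.
BYTES_PER_KB = 1024
LINK_GBIT_PER_S = 10.0  # one uplink, as described in the post


def kb_per_s_to_gbit_per_s(kb_per_s: float) -> float:
    """Convert a rate in KB/s to Gbit/s (decimal gigabits)."""
    return kb_per_s * BYTES_PER_KB * 8 / 1e9


for rate in (10_000, 20_000):
    gbit = kb_per_s_to_gbit_per_s(rate)
    mbyte = rate * BYTES_PER_KB / 1e6
    share = gbit / LINK_GBIT_PER_S
    print(f"{rate} KB/s = {mbyte:.1f} MB/s = {gbit:.3f} Gbit/s "
          f"({share:.1%} of one 10 Gb/s uplink)")
```

Under that assumption the observed rates use only a percent or two of a single uplink, which fits the observation that raw network bandwidth is not the limit.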

Where could the bottleneck be? What am I missing?

Thanks in advance.

Regards,

Bernd

Accepted Solutions

07-02-2023 11:48 PM

Found this one here ...

https://kb.vmware.com/s/article/83401

Maybe the NetApp FAS 2720 is not the fastest for write operations as an NFS BasicDisk backup target ...

fas2720::*> statistics disk show -sort-key operation_latency

fas2720 : 7/3/2023 08:14:48

| Disk | Node | Busy (%) | Total Ops | Read Ops | Write Ops | Read (Bps) | Write (Bps) | *Latency (us) |
|---|---|---|---|---|---|---|---|---|
| 1.3.5 | adncl3-01 | 43 | 52 | 51 | 0 | 1773568 | 1024 | 24856 |
| 1.2.11 | adncl3-01 | 50 | 52 | 52 | 0 | 1957888 | 2048 | 21840 |
| 1.2.7 | adncl3-01 | 48 | 57 | 57 | 0 | 2040832 | 0 | 21415 |
| 1.4.9 | adncl3-01 | 52 | 65 | 65 | 0 | 2387968 | 1024 | 21167 |
| 1.5.7 | adncl3-01 | 47 | 50 | 50 | 0 | 2055168 | 0 | 20383 |
| 1.3.7 | adncl3-01 | 51 | 68 | 68 | 0 | 2185216 | 0 | 19792 |
| 1.3.9 | adncl3-01 | 45 | 57 | 57 | 0 | 2135040 | 0 | 19122 |
| 1.3.11 | adncl3-01 | 44 | 52 | 52 | 0 | 2073600 | 0 | 19058 |
| 1.1.11 | adncl3-01 | 44 | 57 | 57 | 0 | 2018304 | 21504 | 18197 |
| 1.4.7 | adncl3-01 | 43 | 54 | 54 | 0 | 2242560 | 3072 | 18115 |
| 1.5.9 | adncl3-01 | 44 | 60 | 59 | 0 | 2264064 | 2048 | 16991 |
| 1.2.9 | adncl3-01 | 43 | 62 | 62 | 0 | 2221056 | 3072 | 16816 |
| 1.0.3 | adncl3-01 | 24 | 72 | 69 | 2 | 2379776 | 175104 | 15037 |
| 1.1.9 | adncl3-01 | 45 | 69 | 68 | 0 | 2337792 | 1024 | 14815 |
| 1.5.1 | adncl3-01 | 33 | 58 | 57 | 0 | 1985536 | 4096 | 13554 |
| 1.1.5 | adncl3-01 | 31 | 54 | 53 | 0 | 1774592 | 2048 | 13319 |
| 1.4.1 | adncl3-01 | 34 | 58 | 58 | 0 | 1604608 | 3072 | 13197 |
| 1.3.3 | adncl3-01 | 32 | 54 | 54 | 0 | 1701888 | 10240 | 13128 |
| 1.2.5 | adncl3-01 | 32 | 53 | 53 | 0 | 1820672 | 0 | 12883 |
| 1.4.5 | adncl3-01 | 33 | 63 | 62 | 0 | 2308096 | 10240 | 12650 |
| 1.5.5 | adncl3-01 | 32 | 52 | 52 | 0 | 2238464 | 1024 | 12607 |
| 1.4.3 | adncl3-01 | 32 | 53 | 53 | 0 | 1920000 | 2048 | 12573 |
| 1.5.3 | adncl3-01 | 35 | 59 | 59 | 0 | 2415616 | 2048 | 12140 |
| 1.2.3 | adncl3-01 | 31 | 53 | 53 | 0 | 1628160 | 0 | 11767 |
| 1.1.3 | adncl3-01 | 30 | 55 | 55 | 0 | 2255872 | 0 | 11027 |

Compared to Netapp AFF A400 all-flash system for VMware VMDK disk storage.

affa400::*> statistics disk show -sort-key operation_latency

affa400 : 7/3/2023 08:32:56

| Disk | Node | Busy (%) | Total Ops | Read Ops | Write Ops | Read (Bps) | Write (Bps) | *Latency (us) |
|---|---|---|---|---|---|---|---|---|
| 1.1.0 | Multiple_Values | 0 | 387 | 342 | 44 | 11252736 | 10230784 | 152 |
| 1.0.4 | Multiple_Values | 0 | 431 | 373 | 58 | 12254208 | 11289600 | 150 |
| 1.1.21 | Multiple_Values | 0 | 433 | 377 | 56 | 12266496 | 11183104 | 141 |
| 1.0.17 | adncl2-02 | 0 | 427 | 294 | 132 | 6013952 | 6899712 | 136 |
| 1.0.16 | Multiple_Values | 0 | 788 | 654 | 134 | 11297792 | 6376448 | 124 |
| 1.0.0 | Multiple_Values | 0 | 404 | 357 | 46 | 11068416 | 10337280 | 124 |
| 1.0.19 | Multiple_Values | 0 | 809 | 667 | 142 | 10535936 | 6147072 | 122 |
| 1.0.14 | Multiple_Values | 0 | 797 | 648 | 149 | 11408384 | 6798336 | 122 |
| 1.1.3 | Multiple_Values | 0 | 422 | 287 | 134 | 5437440 | 5969920 | 120 |
| 1.0.11 | Multiple_Values | 0 | 834 | 678 | 156 | 12203008 | 6723584 | 118 |
| 1.0.1 | Multiple_Values | 0 | 799 | 647 | 152 | 11014144 | 5924864 | 118 |
| 1.1.5 | Multiple_Values | 0 | 772 | 639 | 133 | 11672576 | 5780480 | 117 |
| 1.1.18 | Multiple_Values | 0 | 445 | 308 | 137 | 5679104 | 5788672 | 117 |
| 1.0.3 | Multiple_Values | 0 | 791 | 655 | 135 | 12166144 | 5995520 | 117 |
| 1.0.12 | Multiple_Values | 0 | 803 | 662 | 141 | 11409408 | 6824960 | 117 |
| 1.0.9 | Multiple_Values | 0 | 726 | 606 | 119 | 11119616 | 6731776 | 116 |
| 1.1.1 | Multiple_Values | 0 | 815 | 667 | 148 | 10887168 | 6126592 | 112 |
| 1.1.20 | Multiple_Values | 0 | 742 | 613 | 129 | 10509312 | 5449728 | 111 |
| 1.1.19 | Multiple_Values | 0 | 795 | 648 | 147 | 11213824 | 5847040 | 111 |
| 1.0.8 | Multiple_Values | 0 | 814 | 659 | 154 | 10331136 | 5297152 | 111 |
| 1.1.23 | Multiple_Values | 0 | 756 | 638 | 118 | 11285504 | 6646784 | 110 |
| 1.1.2 | Multiple_Values | 0 | 780 | 633 | 147 | 10660864 | 6048768 | 110 |
| 1.1.4 | Multiple_Values | 0 | 795 | 638 | 156 | 11356160 | 6163456 | 109 |
| 1.1.22 | Multiple_Values | 0 | 759 | 627 | 132 | 10919936 | 6013952 | 109 |
| 1.0.7 | Multiple_Values | 0 | 782 | 632 | 149 | 11244544 | 6273024 | 105 |
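For a quick side-by-side of the two listings, the latency columns can be summarized with a few lines of Python. This is only a rough sketch: the values are copied from the output above, each system was sampled once, at a different time and under unknown load, so the averages are an indication and not a benchmark. The variable and function names are made up for illustration.

```python
from statistics import mean

# Latency column (microseconds) copied from the two "statistics disk show"
# listings above. One sample per system, so treat the result as indicative only.
FAS2720_LATENCY_US = [
    24856, 21840, 21415, 21167, 20383, 19792, 19122, 19058, 18197, 18115,
    16991, 16816, 15037, 14815, 13554, 13319, 13197, 13128, 12883, 12650,
    12607, 12573, 12140, 11767, 11027,
]
AFFA400_LATENCY_US = [
    152, 150, 141, 136, 124, 124, 122, 122, 120, 118, 118, 117, 117, 117,
    117, 116, 112, 111, 111, 111, 110, 110, 109, 109, 105,
]


def summarize(name: str, latencies_us: list[int]) -> float:
    """Print disk count, mean and max latency; return the mean in microseconds."""
    avg = mean(latencies_us)
    print(f"{name}: {len(latencies_us)} disks, "
          f"mean {avg:.0f} us ({avg / 1000:.2f} ms), max {max(latencies_us)} us")
    return avg


fas_mean = summarize("fas2720", FAS2720_LATENCY_US)
aff_mean = summarize("affa400", AFFA400_LATENCY_US)
print(f"fas2720 mean latency is about {fas_mean / aff_mean:.0f}x the affa400 mean")
```

In these samples the FAS 2720 disks sit in the tens of milliseconds while the AFF A400 disks stay in the low hundreds of microseconds, which is consistent with the suspicion that the backup target is the slower side.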

06-16-2023 12:06 AM

Ah, HotAdd also works with NFS datastores. They just all need to be mounted on the ESXi host where the media server virtual machine is running. That is around 10x faster than NBD.

06-19-2023 01:23 AM

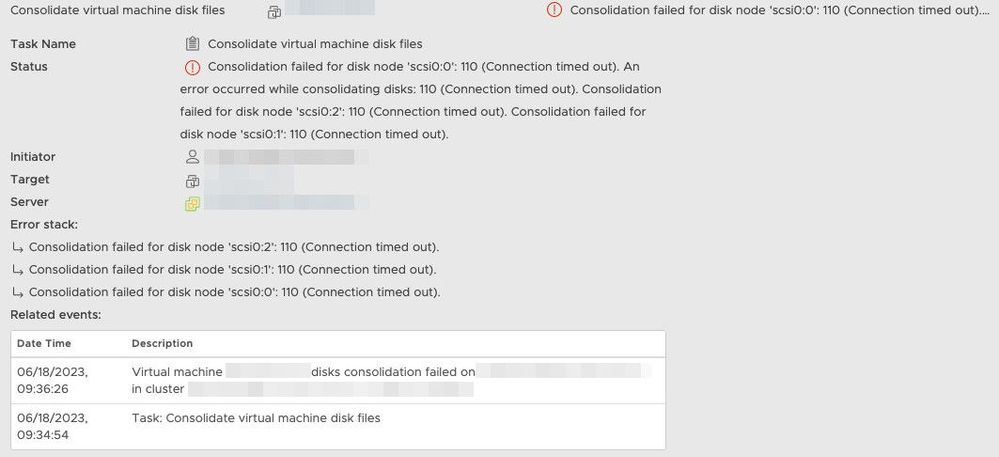

Just had an incident over the weekend where the backup media server could not unmount the virtual disk files of the backup client, so the snapshot consolidation of the client failed with timeout errors.

So, the HotAdd transport method is 10x faster but evil. :(

06-29-2023 01:45 AM

Any ideas on how NBD speed can be improved? The NetApp NFS storage and the network are not the bottleneck, and neither is the CPU/memory of the media server VM.

I looked at Replication Director, which can back up VMs using NetApp Snapshots/SnapMirror, but I could not see how the backup images can then be staged to tape.

