- Deduplication - impacts of data that is already com...

09-27-2023 03:51 PM - edited 09-27-2023 03:54 PM

Is this still a significant problem? I had a quick look at the doco on deduplication and there is no mention of it.

In the past, vendors including Veritas/Symantec warned that data that is already compressed and/or encrypted would result in poor deduplication performance, as would large numbers of tiny files. Compressed data includes stuff like audio files (mp3, wma, ogg etc), video files (avi, mkv, mp4 etc), image files (png, bmp, jpeg etc), PDFs and zipped files.
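To make the "already encrypted" point concrete, here is a minimal sketch of fingerprint-based dedupe. It assumes fixed 4 KiB chunks and SHA-256 fingerprints, both hypothetical choices for illustration and not a description of how MSDP segments data. A one-byte edit to plain data leaves most chunk fingerprints unchanged, while the same data encrypted with a fresh nonce on each backup run shares no chunks at all. `toy_encrypt` is a hypothetical stand-in for real encryption and is not secure.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size; real engines use their own segmenting


def fingerprints(data: bytes) -> set[bytes]:
    """Split data into fixed-size chunks and return the set of SHA-256 fingerprints."""
    return {
        hashlib.sha256(data[i:i + CHUNK_SIZE]).digest()
        for i in range(0, len(data), CHUNK_SIZE)
    }


def shared_fraction(old: bytes, new: bytes) -> float:
    """Fraction of the new backup's unique chunks that the pool already holds from old."""
    new_fps = fingerprints(new)
    return len(new_fps & fingerprints(old)) / len(new_fps)


def toy_encrypt(data: bytes, key: bytes, nonce: bytes) -> bytes:
    """XOR data with a SHA-256 counter-mode keystream. Illustration only, NOT secure."""
    keystream = bytearray()
    counter = 0
    while len(keystream) < len(data):
        keystream += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ k for d, k in zip(data, keystream))


# 64 chunks of distinct plain-text records (16 bytes each, 256 records per chunk).
day1 = b"".join(f"record {i:08d}\n".encode() for i in range(64 * 256))
day2 = bytearray(day1)
day2[100_000] ^= 0x01  # a one-byte change touches exactly one chunk
day2 = bytes(day2)

key = b"hypothetical-key"
enc1 = toy_encrypt(day1, key, nonce=b"backup-1")  # fresh nonce per backup run
enc2 = toy_encrypt(day2, key, nonce=b"backup-2")

print("plain shared:", shared_fraction(day1, day2))
print("encrypted shared:", shared_fraction(enc1, enc2))
```

The encrypted case fails to dedupe not because of the one-byte edit but because every ciphertext byte depends on the per-run nonce, so even identical plain data would look new.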

A while back I tested the rumour that gzip & pigz output created with the --rsyncable option is dedupe friendly. I found it gave a significant improvement in dedupe performance on Commvault, and there was only a very small size difference between the compressed files with and without the --rsyncable option.
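The usual explanation of --rsyncable is that the compressor is reset at points chosen from a rolling checksum of the uncompressed input, so an edit only changes the compressed bytes up to the next reset point, whereas a single whole-file compressed stream can change everywhere after the edit. Python's gzip module has no rsyncable option, so the sketch below only imitates the idea: the window size, the boundary test and the per-segment `zlib.compress` calls are all assumptions for illustration, not gzip's actual algorithm.

```python
import random
import zlib

WINDOW = 64    # hypothetical rolling-window size in bytes
MASK = 0x1FF   # hypothetical boundary test: window sum is a multiple of 512


def rsyncable_segments(data: bytes) -> list[bytes]:
    """Cut data where the byte-sum of the last WINDOW bytes hits a boundary.

    Each cut decision depends only on the last WINDOW bytes, so an edit can
    only move cut points that fall within WINDOW bytes after it.
    """
    segments, start, rolling = [], 0, 0
    for i, byte in enumerate(data):
        rolling += byte
        if i >= WINDOW:
            rolling -= data[i - WINDOW]
        if rolling & MASK == 0:
            segments.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        segments.append(data[start:])
    return segments


def compress_rsyncable(data: bytes) -> list[bytes]:
    """Compress each segment independently, i.e. reset the compressor at every cut."""
    return [zlib.compress(seg) for seg in rsyncable_segments(data)]


def shared_fraction(old: list[bytes], new: list[bytes]) -> float:
    """Fraction of the new compressed segments that already exist in the old set."""
    old_set = set(old)
    return sum(seg in old_set for seg in new) / len(new)


# A compressible, pseudo-random text file and a copy with one bit flipped.
rng = random.Random(42)
words = ["backup", "dedupe", "restore", "policy", "client", "media", "server"]
day1 = " ".join(rng.choice(words) for _ in range(15_000)).encode()
day2 = bytearray(day1)
day2[len(day2) // 2] ^= 0x01
day2 = bytes(day2)

whole1, whole2 = zlib.compress(day1), zlib.compress(day2)
print("whole-file compressed outputs identical:", whole1 == whole2)
print("rsyncable-style segments shared:",
      shared_fraction(compress_rsyncable(day1), compress_rsyncable(day2)))
```

Resetting the compressor more often costs a little compression ratio, which fits the small size difference observed with and without --rsyncable.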

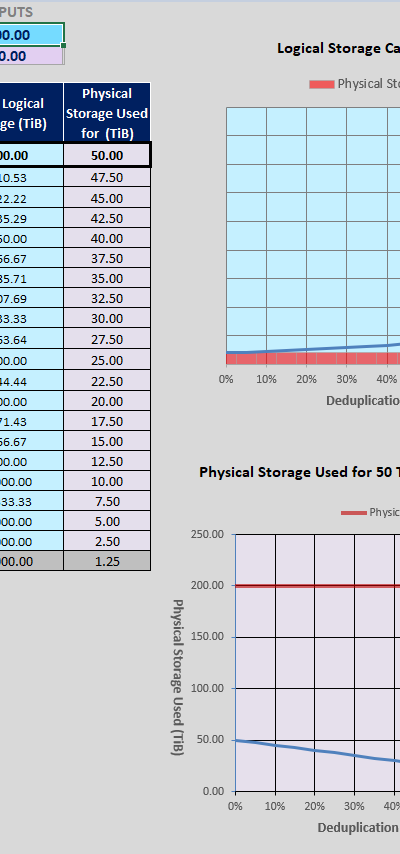

The amount of data that can be deduped can have a big impact on what can be logically stored, as in the example above.
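As a rough worked example of the capacity point: logical capacity is physical capacity multiplied by the overall dedupe ratio, and the space saving is 1 - 1/ratio. The pool size and ratios below are hypothetical, not taken from the screenshot.

```python
def logical_capacity_tb(physical_tb: float, dedupe_ratio: float) -> float:
    """Logical (front-end) data that fits in a pool, given an overall dedupe ratio."""
    return physical_tb * dedupe_ratio


def space_saving(dedupe_ratio: float) -> float:
    """Fraction of the logical data that did not have to be written (0.9 = 90%)."""
    return 1.0 - 1.0 / dedupe_ratio


# Hypothetical 100 TB pool: well-deduping data vs. mostly compressed/encrypted data.
for ratio in (10.0, 2.0, 1.1):
    print(f"{ratio:>4}:1 -> {logical_capacity_tb(100.0, ratio):7.1f} TB logical, "
          f"{space_saving(ratio):.0%} saved")
```

Going from 10:1 to 2:1 cuts the logical capacity of the same hardware by a factor of five, which is why the type of data matters so much when sizing.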

Accepted Solutions

09-28-2023 03:28 PM - edited 09-28-2023 03:30 PM

Thanks. My concern was that the lack of documentation implied things had changed, and I was unable to give people verification of the impact of file size and of data that is already compressed and/or encrypted. It's something I have been telling people since the days of Pure Disk, when it was DIY and came on DVD.

I eventually found a reference in the Backup Planning and Performance Tuning Guide, Release 8.3 and later. The most obvious place to look, the Deduplication Guide Unix, Linux, Windows Release 10, does not point to the Backup Planning and Performance Tuning Guide for additional information; the dedupe guide only mentions performance being impacted by "small files".

09-28-2023 01:23 AM

Hi @jm-10

Backing up the SAME compressed or encrypted files will give you a dedupe "profit", because the dedupe algorithm recognizes the same unique fingerprints. You will not gain any compression benefit, because the files are already compressed (jpeg, mpeg, compressed files). However, if the files are new every day and compressed and/or encrypted, there is no gain from using dedupe storage.
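The difference between backing up the same compressed file again and backing up a new one each day can be sketched with a toy dedupe pool. The `FingerprintStore` class, the fixed 4 KiB chunk size and the `daily_archive` generator are hypothetical illustrations, not MSDP internals.

```python
import hashlib
import random
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size


class FingerprintStore:
    """Toy dedupe pool: keeps one copy of each unique chunk, keyed by SHA-256."""

    def __init__(self) -> None:
        self.chunks: dict[bytes, bytes] = {}

    def backup(self, data: bytes) -> int:
        """Store data and return how many bytes had to be physically written."""
        written = 0
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            fp = hashlib.sha256(chunk).digest()
            if fp not in self.chunks:
                self.chunks[fp] = chunk
                written += len(chunk)
        return written


def daily_archive(seed: int) -> bytes:
    """A hypothetical compressed file whose content differs for each seed."""
    rng = random.Random(seed)
    text = " ".join(str(rng.random()) for _ in range(20_000)).encode()
    return zlib.compress(text)


pool = FingerprintStore()
archive = daily_archive(seed=1)
first = pool.backup(archive)                  # first backup: every chunk is new
repeat = pool.backup(archive)                 # same compressed file again
fresh = pool.backup(daily_archive(seed=2))    # a new compressed file
print(f"first: {first} B, repeat: {repeat} B, new file: {fresh} B written")
```

The repeat backup writes nothing, while the new compressed file has to be written in full, matching the "profit" and "no gain" cases described above.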

Dedupe storage is best for backup data with a small to medium change rate and a retention of 3-4 weeks or more. On the other hand, you want backups to expire within a few months so that singly referenced fingerprints in the MSDP pool can be expired. Larger file sizes also mean better overall dedupe efficiency. This is why dedupe mileage varies with customer data.
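A simplified model shows why change rate and retention matter. Assuming daily full backups, a first full that is entirely unique, and each later full adding only its changed fraction as new unique data, the ratio for retention R days and daily change rate c is R / (1 + (R - 1) * c). These are hypothetical simplifications: the model ignores compression, metadata, file size and the expiry of singly referenced fingerprints.

```python
def dedupe_ratio(retention_days: int, daily_change_rate: float) -> float:
    """Simplified dedupe ratio for daily full backups kept for retention_days.

    Hypothetical model: the first full is all unique, every later full adds
    only its changed fraction as new data; compression, metadata and
    garbage-collection lag are ignored.
    """
    logical = retention_days                                # in units of one full
    physical = 1 + (retention_days - 1) * daily_change_rate
    return logical / physical


for change in (0.01, 0.05, 0.20, 1.00):   # 1.00 = all-new data every day
    print(f"change {change:4.0%}: 7 days {dedupe_ratio(7, change):5.1f}:1, "
          f"28 days {dedupe_ratio(28, change):5.1f}:1")
```

A 100% change rate, which is effectively what new compressed or encrypted files every day look like, gives 1:1 no matter how long the retention.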

/Nicolai
